Complete Guide to Veo 3 Audio Generation: How to Add AI Voice and Music to Videos (With Prompt Templates)

1 AM. I stared at the video I just generated with Veo 3—the character’s mouth opening and closing, but the room was silent as a tomb.

To be honest, I was frustrated. I clearly wrote “A woman says: ‘Hello’” in the prompt, but what I got was a beautiful silent film. I tried three more times, and once the dialogue was completely out of sync with the lip movements, like watching a 90s Hong Kong movie dub.

Later, I discovered that 90% of people stumble into this trap when using Veo 3 audio generation for the first time.

When Google unveiled Veo 3 at the 2025 I/O conference, they claimed it would “break the silent era of video generation”—AI could natively generate dialogue, sound effects, and background music with perfect audio-video sync. Sounds amazing, but when you actually use it, you realize Veo 3 won’t automatically fill in audio. You have to explicitly tell it: what sounds you want, who’s speaking, and how they speak.

In this article, I’ll break down the complete logic of Veo 3 audio generation: from dialogue and sound effects to music, providing ready-to-use prompt templates and a troubleshooting checklist for the 5 most common issues. After reading this, you won’t generate silent videos anymore.

Veo 3 Audio Generation Revolution: The End of the Silent Era

What is Native Audio Generation

Traditional AI video generation works like this: first generate visuals, then you have to find voice actors, record audio, add sound effects, mix everything—the whole process takes about 4 hours, according to Promise Studios’ calculations.

Veo 3 compresses this to 3 minutes.

It uses a “dual-stream architecture” (sounds technical, but basically means video and audio generate simultaneously and auto-align). You input a prompt, and the AI creates visuals while adding audio—character lip movements sync naturally with speech, environmental sounds match the scene—rain sounds when it rains, footsteps sound when walking on wooden floors.

But here’s the key: Veo 3’s audio capabilities fall into three categories, and you need to understand them to use it effectively:

1. Dialogue

What characters or narrators say. You can control tone, accent, and emotion.

2. Sound Effects

Specific sounds happening in the scene: phone ringing, water splashing, door creaking open.

3. Ambient Noise

Background sounds that make scenes feel real: city traffic, waves crashing, office AC humming.

Veo 3.1 Audio Upgrade (October 2025)

On October 14, 2025, Google released Veo 3.1 with significantly improved audio quality.

I tested and compared: Veo 3’s dialogue sometimes “floats”—sound and lip movements off by half a beat. Veo 3.1 basically solved this issue, and now supports multi-person dialogue—two characters taking turns speaking without confusion.

Another useful update: previously only “text-to-video” could add audio, but now “ingredients-to-video” (uploading images to generate video) and “frame extension” (lengthening video duration) also support audio.

But to be clear, Veo 3.1’s audio is more like a “first draft.” Community feedback puts audio naturalness at about 92% of real human recordings, but for professional projects you still need post-production refinement. After all, at $0.75 per second, using it as-is for a final product is risky.

Core Audio Prompt Principles: Clarity is Key

Why You Get Silent Videos

When I first started using Veo 3, about 70% of my videos had no sound. Not because the AI was broken, but because my prompts were too vague.

Veo 3 has a design logic: it won’t proactively add audio. If you don’t specify, it assumes you want a silent video.

For example, if you write: “A woman walking in the rain.”

Veo 3 will dutifully generate a woman walking in the rain—but no rain sounds, no footsteps, nothing.

You need to change it to: “A woman walking in the rain. Audio: rain pattering on pavement, footsteps splashing through puddles.”

Then it knows: oh, you want rain and footstep sounds.
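If you assemble prompts in a script, a tiny helper can make sure the audio clause never gets forgotten. Here is a minimal Python sketch. `with_audio` is a hypothetical helper of my own, not part of any Veo or Flow API, and it only builds the prompt text:

```python
def with_audio(visual: str, sounds: list[str]) -> str:
    """Append an explicit 'Audio:' clause to a visual description."""
    visual = visual.strip()
    # Make sure the visual part ends as a sentence before adding the clause.
    if not visual.endswith((".", "!", "?")):
        visual += "."
    if not sounds:
        return visual  # nothing to add; expect a silent clip
    return f"{visual} Audio: {', '.join(sounds)}."


prompt = with_audio(
    "A woman walking in the rain",
    ["rain pattering on pavement", "footsteps splashing through puddles"],
)
print(prompt)
```

This prints the same rain prompt shown above, so the helper is just a guard against shipping a visual-only prompt by accident.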

Another trap: if you use Veo 3 in Flow, remember to set quality mode to “Highest Quality.” The default preview mode doesn’t generate audio. I got stuck on this the first time, tried a dozen times with no sound, only to discover it was a settings issue.

Three Audio Type Prompt Strategies

Now for the main topic: how to write audio prompts.

Dialogue: Fixed Format for Best Results

The formula is simple: character description + action + quoted dialogue

❌ Wrong example:

“A woman says hello.” (too vague, AI doesn’t know what exactly to say)

✅ Correct example:

“The woman smiles and says, ‘Welcome to Veo 3.’”

To control tone, add emotional modifiers:

- angrily

- nervously

- softly

- excitedly

Complete example:

“The man leans forward and says angrily, ‘Where is my coffee?’”
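The formula is mechanical enough to write as a function. This is only a sketch of the "character + action + quoted dialogue" pattern; `dialogue_prompt` and its parameter names are my own illustration, not anything Veo defines:

```python
def dialogue_prompt(character: str, action: str, line: str, emotion: str = "") -> str:
    """Compose: character description + action + optional emotion + quoted line."""
    says = f"says {emotion}" if emotion else "says"
    return f"{character} {action} and {says}, '{line}'"


print(dialogue_prompt("The woman", "smiles", "Welcome to Veo 3."))
print(dialogue_prompt("The man", "leans forward", "Where is my coffee?", emotion="angrily"))
```

The second call reproduces the complete example above. The emotion is optional, but the quoted line is not.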

Sound Effects: Action + Sound Description

These prompts need to be specific about sound details.

❌ Vague example:

“a phone” (AI doesn’t know what the phone does)

✅ Specific examples:

“the sound of a phone ringing”

“water splashing in the background”

“soft house sounds, the creak of a closet door, and a ticking clock”

Here’s a tip: bind sound effects to visual actions using words like “as” or “when.”

“As the door creaks open, a gust of wind rushes in.”

This makes the cause-and-effect relationship between sound and visuals clear.

Ambient Noise: Scene + Layered Background Sounds

Ambient audio needs to describe “layers,” or it sounds flat.

❌ Thin example:

“city sounds” (too generic)

✅ Layered examples:

“the sounds of city traffic and distant sirens” (foreground traffic + background sirens)

“waves crashing on the shore” (main sound effect)

“the quiet hum of an office” (background noise)

Spatial Audio Description Techniques

This is an advanced technique, but really useful.

Human ears have spatial awareness: nearby sounds are clear, distant sounds are muted. Veo 3 understands this too, but you have to tell it.

Use these words to describe spatial relationships:

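- in the distance (the sound is far away and muted)

- cuts through (the sound stands out over everything else, marking it as the main sound effect)

- somewhere above (the sound comes from an unseen source overhead)

- faintly (the sound is quiet and barely audible)

- echoing (the sound reverberates off surrounding surfaces)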

Complete example (I tested this with great results):

Rain falls steadily onto wet pavement, pattering softly across rooftops and metal bins.

A single, low thunderclap rolls across the sky, echoing faintly between tall buildings.

A car passes faintly in the distance. A dog barks once.

A soft, tense melody plays from an old radio somewhere above.

See, this has:

- Foreground main sound: rain hitting the ground

- Midground support: thunder echoing in the distance

- Background accents: car passing, dog barking once

- Atmospheric sound: radio somewhere upstairs

Veo 3 interprets this kind of layered audio description very accurately.

Dialogue Generation in Practice: Making Characters Speak

Single-Character Dialogue Best Practices

Dialogue generation is the trickiest part of Veo 3’s audio features. Not technically difficult, just lots of rules.

First iron rule: Keep dialogue short, one line, under 8 seconds.

I tried having characters say long speeches, and either lines got dropped or lip sync drifted. I later found that Veo 3’s sync capability for long dialogue isn’t stable enough. You either split dialogue into multiple segments or keep it to one sentence.
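To check a script against the 8-second rule before spending money, you can estimate speaking time from word count. This is a rough sketch: the rate of 2.5 words per second is my own assumption for conversational English, not a figure from Veo 3, and `split_dialogue` is a hypothetical helper:

```python
import re

WORDS_PER_SECOND = 2.5  # assumed speaking rate, not a Veo 3 figure


def estimate_seconds(line: str) -> float:
    """Estimate how long a line takes to say, based on word count alone."""
    return len(line.split()) / WORDS_PER_SECOND


def split_dialogue(script: str, max_seconds: float = 8.0) -> list[tuple[str, bool]]:
    """Split a script into one sentence per segment and flag whether each fits."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", script.strip()) if s]
    return [(s, estimate_seconds(s) <= max_seconds) for s in sentences]


for sentence, fits in split_dialogue("Did you hear that? I think someone is outside."):
    print(f"{'OK ' if fits else 'LONG'} {sentence}")
```

Each sentence becomes its own segment. Anything flagged `LONG` should be shortened or split by hand before generating.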

Second rule: Combine emotion + action + speech.

❌ Bland example:

“He says, ‘Did you hear that?’”

✅ Dynamic example:

“He bursts into wild laughter, head thrown back. Mid-laugh, he stops, eyes widening in terror, then whispers softly: ‘Did you hear that?’”

See the difference? The latter includes the emotional transition process: laughter → sudden stop → fear → whisper. Veo 3 can generate this kind of emotional shift, and the effect is remarkably realistic.

Third rule: Character consistency matters.

If you’re generating multiple segments, use the same character description every time. Like “a woman in a red coat with short black hair”—this description must be identical in every prompt, or the AI will generate different characters with different voices.
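The easiest way to keep the description identical is to define it once and reuse it. A minimal sketch follows; `CHARACTER` and `segment_prompt` are names I made up for illustration, and the second line of dialogue is just a placeholder:

```python
# Define the character once so every segment uses exactly the same wording.
CHARACTER = "a woman in a red coat with short black hair"


def segment_prompt(character: str, action_and_line: str) -> str:
    """Start each segment prompt with the shared character description."""
    return f"{character[0].upper()}{character[1:]} {action_and_line}"


segments = [
    segment_prompt(CHARACTER, "walks into a café and says, 'One cappuccino, please.'"),
    segment_prompt(CHARACTER, "sits by the window and says softly, 'Finally, some quiet.'"),
]
for s in segments:
    print(s)
```

Retyping the description by hand for each segment is where small differences creep in, so a single constant removes that risk.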

Multi-Character Dialogue Techniques

Two people speaking in the same clip is a nightmare scenario for Veo 3 audio generation.

I tried directly writing dialogue scripts, like this:

Man: "What are you doing?"

Woman: "None of your business."

The result was disastrous. Either only one person had sound, or both dialogues didn’t match the visuals.

The correct approach: Don’t write dialogue scripts, write scene flow.

✅ Effective example:

“Inside a cluttered garage, two teenage friends argue over a broken time machine. One leans over the table, frustrated and loud. The other avoids eye contact, mumbling and fiddling with wires. Rain hits the roof, and the lights flicker.”

The logic of this approach: let the AI understand “two people are arguing” rather than telling it “A says this, B says that.” Veo 3 will decide who speaks when and how.

But honestly, the success rate for multi-character dialogue is still lower than for single-character dialogue. If you need complex dialogue, I suggest letting only one person speak per segment and stitching the segments together in post-production.

Lip Sync Optimization

Lip sync issues are the most common problem in dialogue generation.

Three suggestions:

1. Only one character speaks per segment

I mentioned this before, but it’s worth repeating. Multiple speakers appearing simultaneously makes sync prone to errors.

2. Use explicit “turn-taking” descriptions

If you must have multi-character dialogue, clearly state who speaks first and who speaks next.

“The woman speaks first, then pauses. The man nods and replies.”

3. Add “No subtitles.”

Many people don’t know this detail. Veo 3 sometimes automatically generates subtitles overlaying the scene, covering characters’ mouths. Adding “No subtitles.” disables this feature.

Chinese vs. English Dialogue Differences

Here’s a harsh reality: Chinese dialogue quality is far worse than English.

I tested about twenty Chinese dialogue prompts, and the success rate was under 30%. Common issues:

- Dropped lines: wrote three sentences, only generated one

- Dialogue subject confusion: clearly A’s line, but B’s mouth is moving

- Strange accent: Mandarin sounds like a robot

English works much better. For the same scene, English prompts have a success rate above 70%.

Workaround: Use English for core dialogue, Chinese for scene descriptions.

For example:

“一个穿红色外套的女人走进咖啡馆,微笑着对服务员说:‘One cappuccino, please.’” (scene in Chinese, dialogue in English; the Chinese part reads “A woman in a red coat walks into a café, smiles, and says to the waiter:”)

This way you understand the prompt easily, and dialogue quality doesn’t suffer.

Sound Effects & Music: Creating Immersive Experiences

Layered Sound Design

More sound effects aren’t always better. Piling on too many sounds creates chaos.

My experience: Three layers, with clear priorities.

Foreground — Core action sound effects

This is where audience attention focuses. Door opening, glass shattering, footsteps—these should be clear and loud.

Midground — Supporting environmental sounds

These shouldn’t steal the spotlight from the main sound effects, but they add realism: an espresso machine humming in a café, customers chatting quietly.

Background — Atmospheric music

Base layer sound, creating mood. Soft jazz, distant traffic sounds.

Complete example (café scene):

Audio: espresso machine hissing (foreground), soft jazz music (background),

customers chatting quietly (midground). The barista says: "One cappuccino coming right up!"

Using parentheses to mark layers helps Veo 3 understand better. Without marking, it sometimes makes background music too loud, drowning out dialogue.
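The parenthesized layer tags are easy to generate from structured data. Here is a sketch under my own assumptions: `layered_audio` is a hypothetical helper, and ordering the output foreground first is just my convention, not something Veo 3 requires:

```python
LAYER_ORDER = ["foreground", "midground", "background"]


def layered_audio(layers: dict[str, list[str]]) -> str:
    """Build an 'Audio:' clause with each sound tagged by its layer."""
    unknown = set(layers) - set(LAYER_ORDER)
    if unknown:
        raise ValueError(f"unknown layers: {sorted(unknown)}")
    parts = [
        f"{sound} ({layer})"
        for layer in LAYER_ORDER
        for sound in layers.get(layer, [])
    ]
    return "Audio: " + ", ".join(parts) + "."


cafe = layered_audio({
    "foreground": ["espresso machine hissing"],
    "midground": ["customers chatting quietly"],
    "background": ["soft jazz music"],
})
print(cafe)
```

Keeping the sounds in a dictionary also makes it obvious when one layer is overloaded.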

Controlling Background Music Emotion

Background music is the most easily overlooked yet critically important element.

Be specific about music type:

- jazz

- classical

- electronic

- ambient

- upbeat

Emotional modifiers:

- tense

- upbeat

- melancholic

- mysterious

Specific examples:

- “A soft, tense melody plays”

- “Upbeat festival music with steady drums”

A detail many don’t know: you can also control music tempo.

- slow tempo → suitable for sad, reminiscence scenes

- fast tempo → suitable for action, chase scenes

Avoiding Audio Conflicts

This was my biggest mistake.

At first, I thought more sound effects meant more realism. So in a 5-second clip I wrote: rain sounds, thunder, footsteps, traffic, dialogue, background music—6 audio elements total.

The result? The generated video sounded like mush, nothing clear.

Later I learned: Maximum 3-4 audio layers per segment, with clear priorities.

Use volume modifiers to mark priority:

- loud → foreground main sound effect

- soft → background music

- faint → distant environmental sounds

- dominating → core sound effect

Example:

“Loud thunder crashes (dominating). Rain patters softly on the roof (background). A car engine starts faintly in the distance.”

This way Veo 3 knows: thunder is the star, rain provides base, car adds accent.
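If you tend to over-stuff prompts like I did, you can enforce the cap in code. A minimal sketch: `keep_top_layers` is a hypothetical helper, it assumes you have already sorted the list by priority, and the ordering in the example is my own choice:

```python
def keep_top_layers(elements: list[str], max_layers: int = 4) -> tuple[list[str], list[str]]:
    """Split a priority-ordered list of audio elements into (kept, dropped)."""
    if max_layers < 1:
        raise ValueError("max_layers must be at least 1")
    return elements[:max_layers], elements[max_layers:]


# The six elements from my failed 5-second clip, most important first.
wishlist = ["dialogue", "thunder", "rain sounds", "background music", "footsteps", "traffic"]
kept, dropped = keep_top_layers(wishlist)
print("keep:", kept)
print("drop:", dropped)
```

Here footsteps and traffic get dropped. They can go into a separate segment if the scene really needs them.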

Troubleshooting: Solutions to 5 Common Audio Problems

Problem 1: Generated Video Has No Sound

This is the most common issue, with about 85% of “silent videos” caused by these three reasons.

Reason 1: Audio not explicitly specified in prompt

Check your prompt—does it have keywords like “Audio:”, “says”, or quoted dialogue? Without them, Veo 3 defaults to silent video.

Solutions:

- Use standalone sentences to describe audio: “Audio includes…”

- Wrap dialogue in quotes: “The man says, ‘Hello.’”

- As a last resort, add: “Please generate this with clear speech.”

Reason 2: Wrong quality mode selected

Flow has two modes: Preview and Highest Quality. Preview mode doesn’t generate audio.

Solution:

Open Flow → Click settings icon → Select “Highest Quality”

Reason 3: Audio description buried in prompt

If you write 300 words describing visuals and only add “with dialogue” at the end, Veo 3 will likely ignore the audio instruction.

Solution:

Move audio instructions forward, place them in the first half of the prompt.
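Reasons 1 and 3 can be caught before you generate anything. The sketch below is a rough heuristic of my own, not an official validator. The cue list ("Audio:", "Audio includes", "say/says", double quotes) is my assumption, and single-quoted dialogue is deliberately not detected because apostrophes would trigger false positives:

```python
import re

# Heuristic cue list (my own assumption): "Audio:", "Audio includes",
# "say"/"says", or an opening double quote.
AUDIO_CUES = re.compile(r"\baudio\s*:|\baudio includes\b|\bsays?\b|[\"“]", re.IGNORECASE)


def lint_prompt(prompt: str) -> list[str]:
    """Return warnings about missing or buried audio instructions."""
    warnings = []
    match = AUDIO_CUES.search(prompt)
    if match is None:
        warnings.append("no explicit audio cue found")
    elif match.start() > len(prompt) / 2:
        warnings.append("first audio cue appears in the second half of the prompt")
    return warnings


print(lint_prompt("A woman walking in the rain."))
print(lint_prompt("Audio: rain pattering on pavement. A woman walking in the rain."))
```

An empty list means neither problem was detected. It doesn't guarantee the clip will have sound, and Reason 2 (quality mode) still has to be checked in Flow itself.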

Problem 2: Dialogue and Lip Sync Mismatch

Lips drifting, sound half a beat faster than mouth movements—this issue is especially common in multi-character dialogue.

Root cause: AI gets confused when processing multiple speakers simultaneously.

Solutions:

- Split segments: Only one person speaks per 8-second segment, stitch in post

- Shorten dialogue: Keep each line under 5 seconds

- Use “turn-taking” description: “The woman speaks first, pauses, then the man responds.”

I tested this—single-character dialogue lip sync success rate reaches 80%, multi-character only 40%. If lip sync is critical, don’t force it.

Problem 3: Poor Audio Quality or Unnatural Sound

Sound feels “floaty,” “mechanical,” “robotic.”

Reason: Prompt too vague, lacking voice characteristic descriptions.

For example, you write “A man speaks,” and AI doesn’t know what this man’s voice sounds like. Deep? Sharp? Raspy? Without information, it can only generate an “average male voice.”

Solutions:

Add voice characteristic descriptions

- clear

- raspy

- sharp

- deep

Describe environmental reverb

- indoor reverb

- outdoor, open space

- echoing space

Specify accent and pace (for English dialogue)

- British accent

- slow, deliberate pace

Complete example:

“A man with a deep, raspy voice speaks slowly in an indoor space: ‘Welcome home.’”
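The three kinds of description (voice characteristics, pace, space) slot into one sentence pattern. This is a sketch only; `voiced_line` and its parameters are hypothetical names of mine:

```python
def voiced_line(speaker: str, line: str, timbre: list[str],
                pace: str = "", space: str = "") -> str:
    """Describe the voice, optional pace, and optional space before the quoted line."""
    if not timbre:
        raise ValueError("give at least one voice characteristic, e.g. 'deep'")
    pace_part = f" {pace}" if pace else ""
    space_part = f" in {space}" if space else ""
    return f"{speaker} with a {', '.join(timbre)} voice speaks{pace_part}{space_part}: '{line}'"


print(voiced_line("A man", "Welcome home.", ["deep", "raspy"],
                  pace="slowly", space="an indoor space"))
```

The call reproduces the complete example above. Forcing at least one voice characteristic is the point, since an undescribed voice is what produces the "average" result.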

Problem 4: Sound Effects Don’t Match Visuals

For example, a character is clearly walking on a wooden floor, but the footsteps sound like stone. Or the sound of a door opening comes a second before the door moves.

Reason: Sound effect description disconnected from visual scene.

Solution:

Describe visual and audio in the same sentence, connected with causal words.

❌ Separated description:

“A door opens. There is a creaking sound.”

✅ Bound description:

“As the door creaks open, a gust of wind rushes in.”

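Use these words to establish cause-and-effect:

- as (the sound happens at the moment of the action)

- when (the sound is triggered by the action)

- while (the sound continues for the duration of the action)

- making (the action itself produces the sound)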

Example:

“She walks across the wooden floor, her heels clicking sharply with each step.”

Problem 5: Background Music Drowns Out Dialogue or Sound Effects

This is particularly annoying. You carefully designed dialogue, only to have it completely covered by background music.

Reason: Audio layers and volume relationships unclear.

Solution:

Use volume modifiers to clearly mark who’s the star.

❌ No layering:

“Background music plays. The woman says, ‘Hello.’”

✅ Clear layering:

“Soft background music plays quietly. The woman’s voice cuts through clearly: ‘Hello.’”

Key modifiers:

- soft background music

- loud foreground dialogue

- voice cuts through

- music fades into background

Another tip: if dialogue is important, just skip background music. Simple and brutal, but effective.

Advanced Techniques: Improving Audio Generation Success Rate

Using Veo 3 Prompt Generators

It’s easy to miss details when writing prompts by hand. There’s an easier way: use prompt generators.

Two free tool recommendations:

prompt-helper.com/veo-3-prompt-generator

No login required. Enter a scene description and it generates a complete prompt with audio instructions.

Google’s official Veo 3.1 prompt generator

Integrated in Flow editor, automatically suggests audio elements based on your scene.

I now basically use generators as a base for complex scenes, then manually adjust details. Saves a lot of time.

Cost Control Strategies

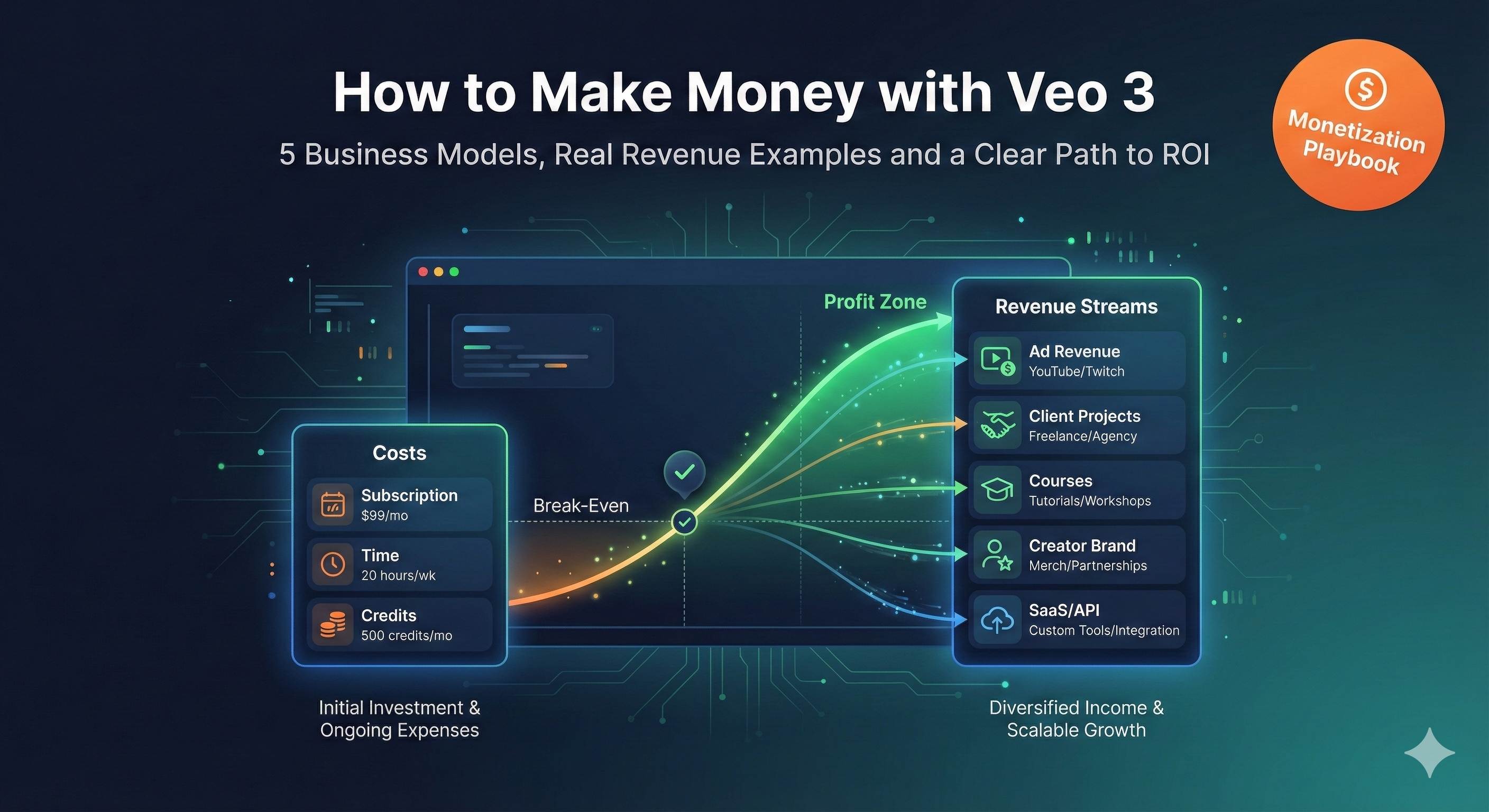

Veo 3’s pricing model: $0.75/second.

An 8-second video costs $6, one minute costs $45. If you test five or six times, money burns fast.
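The arithmetic is simple, but it helps to see how retries multiply the bill. A quick sketch using the $0.75/second figure quoted above; `clip_cost` is just an illustrative helper:

```python
PRICE_PER_SECOND = 0.75  # USD per second, the figure quoted in this article


def clip_cost(seconds: float, attempts: int = 1) -> float:
    """Total cost of generating a clip of the given length `attempts` times."""
    return seconds * PRICE_PER_SECOND * attempts


print(clip_cost(8))      # 6.0
print(clip_cost(60))     # 45.0
print(clip_cost(8, 6))   # 36.0
```

Six attempts at an 8-second clip already cost $36, which is why the tips below focus on cheap test runs.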

Money-saving tips:

1. Test with low quality mode first

Flow’s Preview mode generates quickly and costs less, but produces no audio.

Good for testing composition first, then rendering audio version with Highest Quality after confirmation.

2. Shorten video duration

Don’t jump straight to 60 seconds. Start with 5-8 second test segments, and extend the duration once you’re satisfied with the audio quality.

3. Use “Extend” function

Veo 3.1’s Extend function can lengthen existing videos, cheaper than regenerating. And Extend now supports audio continuation too.

Post-Production Adjustments with Flow Editor

Veo 3.1 deeply integrates with Flow, and some audio issues can be fixed in post.

Available post-production adjustments:

- Volume balance: If the background music is too loud, use Flow’s audio adjustment tools to lower the volume

- Segment stitching: Stitch multiple single-character dialogue segments into a complete dialogue, which is more reliable than generating multi-character dialogue directly

- Audio replacement: Keep the visuals and replace the audio track separately (this somewhat defeats the “native audio” purpose, but it works as a last-resort rescue)

Flow’s “Extend” function is particularly useful:

Generate an 8-second video with audio, then use Extend to lengthen to 15 seconds, audio naturally continues. Much higher success rate than directly generating 15 seconds.

But honestly, Flow’s audio editing capabilities are still pretty basic. For professional-grade audio post-production, you still need to export to Premiere or Final Cut for refinement.

Conclusion

To sum it all up in three sentences:

Explicitly specify audio—Veo 3 won’t fill in blanks, you must tell it what sounds you want.

Layered design—Separate dialogue, sound effects, and background music with clear priorities, don’t pile everything on.

Short sentence principle—Keep dialogue under 8 seconds, one speaker at a time.

Veo 3 audio generation definitely requires multiple tries to get the hang of it. I wasted about 20 test videos before figuring out these patterns. But once you master it, video creation efficiency can multiply—traditional 4-hour voiceover work now takes 3 minutes.

Although Veo 3.1’s Chinese support isn’t good enough yet, and multi-character dialogue sync rate needs improvement, this is already a huge leap for the video generation field. Google says Veo 3 is rapidly iterating, so these issues will likely improve next year.

Take action now:

- Open Veo 3, select Highest Quality mode

- Copy a prompt template from this article, adapt it to your own scene

- Generate your first AI video with audio

If you run into issues, flip back to the troubleshooting checklist above. Audio generation is a skill, but definitely not black magic. Try a few times and you’ll get it.

Published on: Dec 7, 2025 · Modified on: Dec 15, 2025

Related Posts

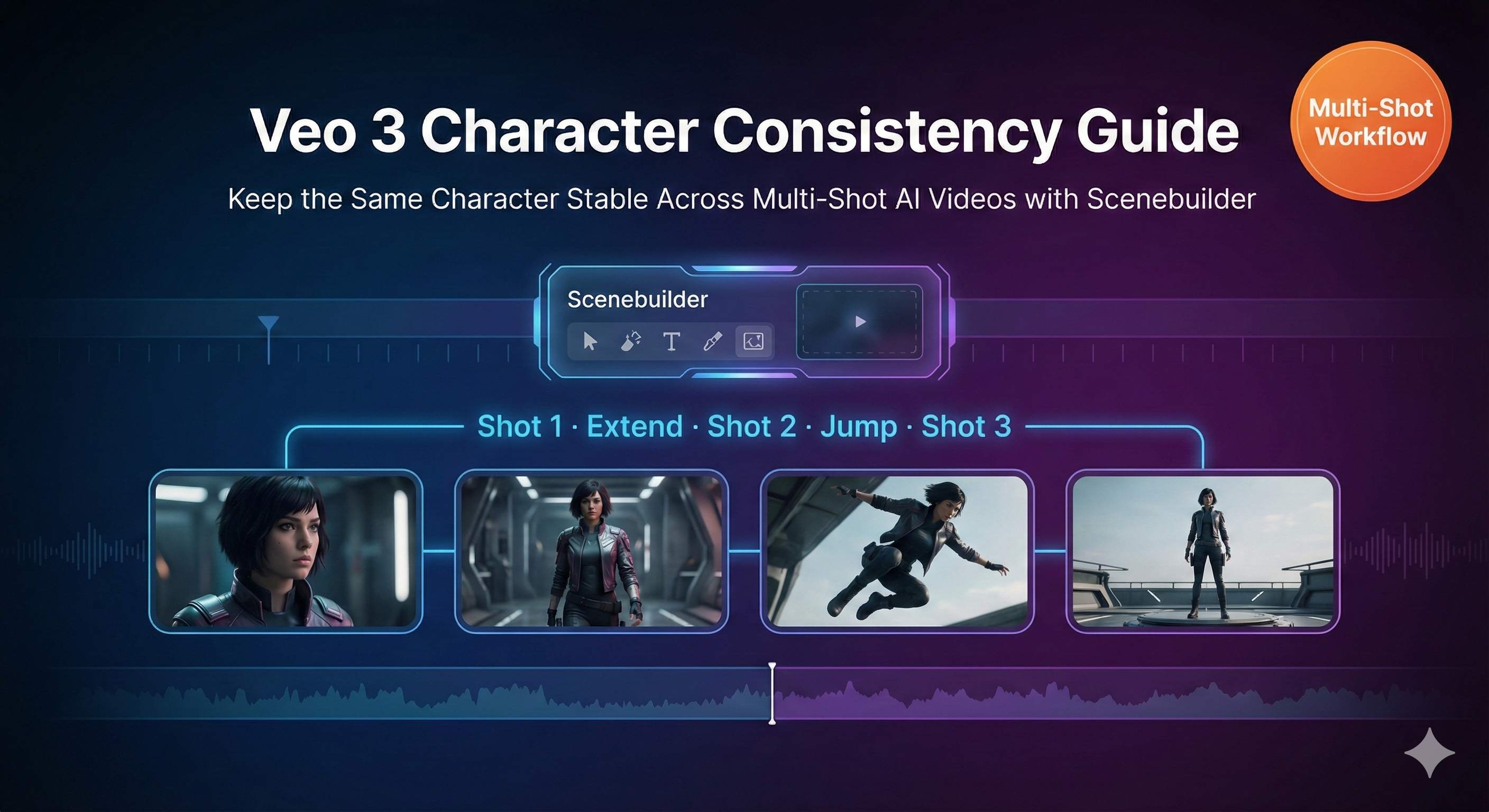

The Complete Guide to Veo 3 Character Consistency: Creating Coherent Multi-Shot Videos with Scenebuilder

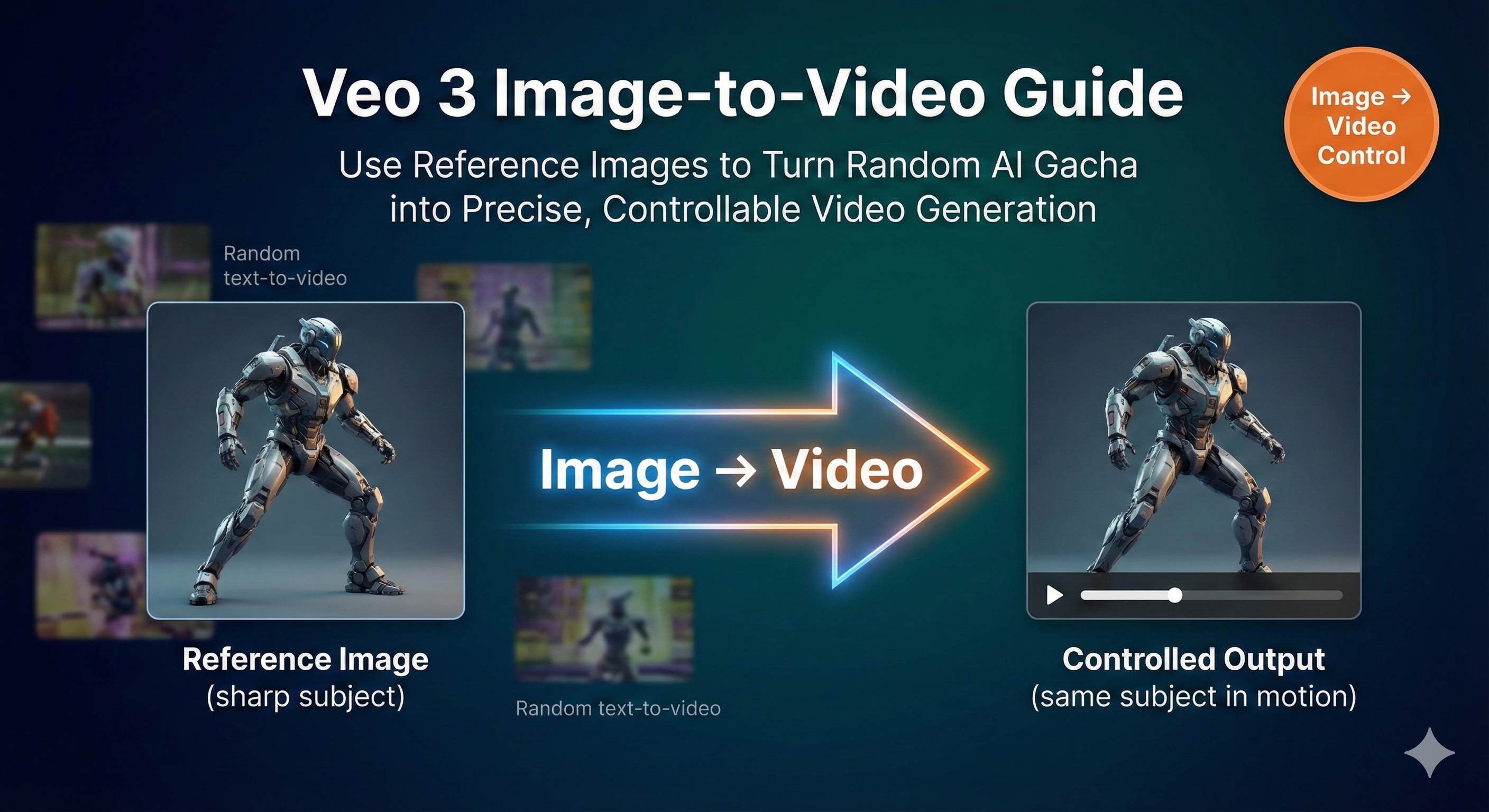

Master Veo 3 Image-to-Video: Using Reference Images for Precise Control

Comments

Sign in with GitHub to leave a comment