Docker Container Exits Immediately? Complete Troubleshooting Guide (Exit Code 137/1 Solutions)

It was Friday night at 8:30 PM. I was about to shut down my computer when my phone buzzed—production alert. Four core service containers had all gone into Exited status.

I opened the terminal and typed docker ps. Empty. Completely empty.

It’s like opening your fridge expecting a drink and finding the whole thing empty. Panic.

To be honest, my first thought was “Great, there goes my weekend.” But after calming down, I realized this wasn’t the first time I’d dealt with container startup failures. It was just more sudden this time, with bigger impact.

After 2+ hours of troubleshooting, I found the issue was actually simple—a misconfigured file path caused database connection failure, making the container exit immediately after starting. If I’d had a systematic troubleshooting process, I could’ve solved it in 10 minutes.

This article is my hard-won guide to diagnosing container startup failures. Whether you’re seeing Exit Code 1, 137, or something else, this method will help you quickly pinpoint the root cause.

Understanding Container Lifecycle and Exit Codes

Before we start troubleshooting, let’s clarify a fundamental question: why do containers exit?

The Nature of Containers: Process Lifecycle

A Docker container is essentially an isolated process. When the process is alive, the container runs; when the process dies, the container exits.

Imagine you start a web server container. The main process might be nginx or node. As long as that process runs, docker ps shows the container. But if the main process exits for any reason—normal completion, crash, or system kill—the container immediately goes into Exited status.

That’s why sometimes docker ps shows nothing, and you need the -a flag to see exited containers.

Exit Code Quick Reference: The Story Behind the Numbers

Each time a container exits, Docker records an exit code. These numbers might seem cryptic, but they’re actually telling you what happened.

Exit Code 0: All good, task completed.

For example, if you run a data import script that finishes successfully, it exits with 0. This isn’t a problem—the container just finished its job.

Exit Code 1: The program had an issue.

This is the most common error code. Could be a misconfigured file, missing dependency, or code bug. Basically, the application inside the container crashed.

I remember deploying a MySQL container once that kept exiting with code 1. After digging through logs, I found I’d accidentally typed a colon instead of an equals sign in the config file. MySQL saw the syntax error and refused to start.

Exit Code 137: Out of memory, or forcibly killed.

This is the code I dread most. 137 usually means one of two things:

- The container exceeded its memory limit, and Linux’s OOM Killer terminated the process

- Someone (or the system) executed docker kill or kill -9

How to tell? Check the OOMKilled field with docker inspect. If it’s true, it’s a memory issue; if false, it was probably manually terminated.

Exit Code 127: Command not found.

Usually means the CMD or ENTRYPOINT in the Dockerfile has a wrong path, or the executable doesn’t exist in the container image.

Exit Code 139: Segmentation fault.

This typically shows up in C/C++ programs, meaning the program accessed memory it shouldn’t have. If you’re not running low-level programs, you’ll rarely see this.

Exit Code Patterns

Exit codes actually follow a pattern:

- 0: Normal exit, no issues

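- 1-127: Application or startup-command problems (app errors, config errors, command not found, etc.)

- 128 and above: External intervention (killed by signal, terminated by system, etc.). The code is usually 128 plus the signal number, so 137 is 128 + 9 (SIGKILL) and 139 is 128 + 11 (SIGSEGV)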

Understanding these patterns helps you know what type of problem you’re dealing with, giving you direction for troubleshooting.
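As a rough illustration of the ranges above, here is a minimal shell sketch that turns an exit code into a category. The function name describe_exit_code is made up for this example, and the categories are the rules of thumb from this section, not anything Docker itself reports.

```shell
# Hypothetical helper: map a container exit code to a rough category.
describe_exit_code() {
  case "$1" in
    0)   echo "normal exit" ;;
    126) echo "command found but not executable" ;;
    127) echo "command not found" ;;
    *)
      if [ "$1" -gt 128 ]; then
        # Codes above 128 conventionally mean 128 + signal number
        echo "terminated by signal $(( $1 - 128 ))"
      else
        echo "application error"
      fi
      ;;
  esac
}
```

For example, describe_exit_code 137 reports signal 9 (SIGKILL), which is the cue to check OOMKilled next.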

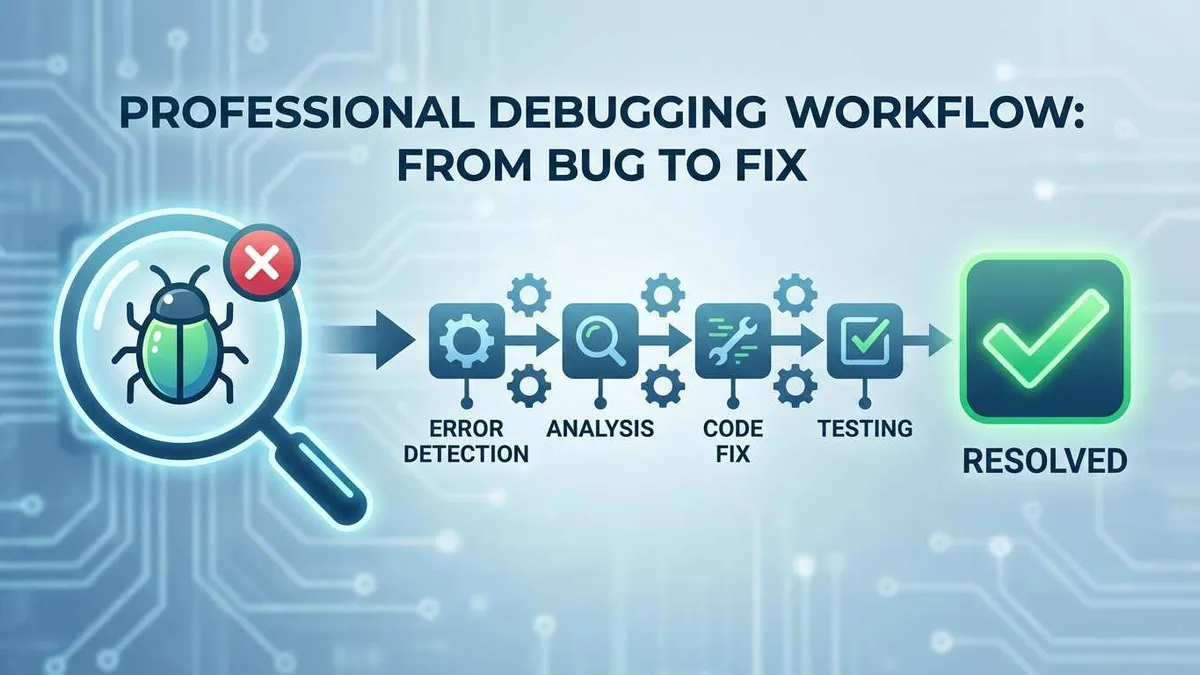

4-Step Diagnosis Method for Quick Problem Location

Now you know what exit codes mean. But knowing meanings isn’t enough—you need to know how to dig out the problem step by step.

I’ve developed a 4-step diagnosis method that covers about 90% of container startup failure scenarios. Follow this process and you’ll find problems aren’t so mysterious after all.

Step 1: Confirm Container Status

Don’t rush to check logs yet. First, confirm the container exists and has actually died.

docker ps -a

This command lists all containers, including exited ones. Pay attention to these key pieces of information:

CONTAINER ID: The container’s unique identifier, needed for subsequent commands. You can copy just the first few characters; Docker will auto-match.

STATUS column: This is the key. Running containers show Up X minutes, exited containers show Exited (code) X minutes ago.

For example:

CONTAINER ID   IMAGE       STATUS
a1b2c3d4e5f6   mysql:8.0   Exited (1) 2 minutes ago

Exit code 1 suggests an application layer problem. If it’s 137, likely a memory issue.

Note creation and exit times. If the container exits less than 1 second after creation, it’s probably a startup command or config issue. If it ran for a while before exiting, it might be resource shortage or dependency failure.
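When there are many containers, scanning the STATUS column by eye gets tedious. The sketch below filters it down to containers that exited with a non-zero code. The function name failed_containers is hypothetical, and it assumes tab-separated "name, status" lines on stdin in the "Exited (code) ..." form shown above.

```shell
# Reads "name<TAB>status" lines and prints "name code" for every container
# that exited with a non-zero exit code. Running containers and clean exits
# (code 0) are skipped.
failed_containers() {
  awk -F '\t' '
    $2 ~ /^Exited \([0-9]+\)/ {
      code = $2
      sub(/^Exited \(/, "", code)   # drop the leading "Exited ("
      sub(/\).*/, "", code)         # drop everything from ")" onward
      if (code + 0 != 0) print $1, code
    }'
}
```

A typical call would be docker ps -a --format '{{.Names}}\t{{.Status}}' | failed_containers.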

Step 2: Check Container Logs

This is the most critical step. Containers usually leave clues before exiting, and those clues are in the logs.

Basic viewing:

docker logs <container_id>

This shows all standard output and standard error from the container. Often you’ll directly see error messages like Permission denied, No such file or directory, Connection refused, etc.

Real-time tracking (good for troubleshooting startup process):

docker logs -f <container_id>

If you want to see what happens during container startup, use -f. It works like tail -f, showing new logs in real-time. Though this is less useful for already-exited containers, it’s great when trying to restart.

View recent logs only:

docker logs --tail 100 <container_id>

If container logs are too long, just check the last 100 lines. Often the last few lines reveal the problem.

Add timestamps:

docker logs -t <container_id>

The -t flag adds timestamps to each log line, helping you determine exactly when the problem occurred.

Filter error logs:

docker logs <container_id> 2>&1 | grep -i error

If there are too many logs, just look for lines containing “error”. This quickly pinpoints critical errors.
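Not every fatal message contains the word “error”. As a small sketch, the filter below also matches the other messages mentioned in this guide. The function name startup_errors and the pattern list are illustrative assumptions, so extend the list for your own stack.

```shell
# Reads log text on stdin and prints only lines that match common
# startup-failure messages (case-insensitive). The list is not exhaustive.
startup_errors() {
  grep -iE 'error|permission denied|no such file|connection refused|address already in use|cannot allocate memory'
}
```

Usage would look like docker logs --tail 200 <container_id> 2>&1 | startup_errors.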

Step 3: Check Container Configuration

Sometimes logs don’t reveal much. That’s when you need to dive deeper into the container’s config and state.

View full configuration:

docker inspect <container_id>

This outputs a ton of JSON information, including all container config, environment variables, mount points, network settings, etc. It’s a lot of information, but very useful.

Quickly check specific information:

Check exit code:

docker inspect --format '{{.State.ExitCode}}' <container_id>

Check if OOM killed:

docker inspect --format '{{.State.OOMKilled}}' <container_id>

If output is true, it’s definitely a memory problem.

Check environment variables:

docker inspect --format '{{.Config.Env}}' <container_id>

Sometimes environment variables are misconfigured: database connection strings, API keys, etc.

Check mount paths:

docker inspect --format '{{.Mounts}}' <container_id>

Verify that config files and data directories are mounted correctly.

Check log file path:

docker inspect --format='{{.LogPath}}' <container_id>

If docker logs doesn’t work, you can directly find the log file on the host.
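The state checks above can be combined into a single call. This is only a convenience sketch: container_summary is a made-up name, and it assumes the State fields ExitCode, OOMKilled, Error and FinishedAt as they appear in docker inspect output.

```shell
# Print the key State fields of one container on a single line.
# $1 is the container ID or name.
container_summary() {
  docker inspect --format \
    'exit={{.State.ExitCode}} oom={{.State.OOMKilled}} error={{.State.Error}} finished={{.State.FinishedAt}}' \
    "$1"
}
```

Running container_summary <container_id> gives a one-line answer to “what code, was it OOM, and when”.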

Step 4: Interactive Startup Verification

If you’ve completed the first three steps and still haven’t located the problem, you need to get inside the container yourself.

Start container interactively:

If your original startup command was:

docker run -d my-app

Change -d to -it to run the container in the foreground:

docker run -it my-app

This lets you see all output during container startup in real-time. Many errors will display directly on screen.

Manually enter container:

If the container starts and immediately exits, you can use a shell to enter and manually execute commands:

docker run -it my-app /bin/bash

Or:

docker run -it my-app /bin/sh

If the image defines an ENTRYPOINT, the shell path is passed to it as an argument instead of replacing it. In that case override the entrypoint: docker run -it --entrypoint /bin/sh my-app

Once inside, you can:

- Check if config files exist: ls /etc/app/config.yaml

- Test config file syntax: for example, MySQL’s mysqld --verbose --help validates the config

- Manually run the startup command to see specific errors

- Check dependency service connectivity: ping database, telnet redis 6379

This method is especially good for troubleshooting path issues, permission issues, and dependency issues.

I know this seems like a lot of steps. But trust me, in practice, most problems are solved in step 2 when checking logs. Only tricky edge cases require all four steps.

5 Common Failure Scenarios and Solutions

Now that we’ve covered troubleshooting methods, let’s look at real-world scenarios. I’ve categorized them into five types that cover most daily issues.

Scenario 1: Config File Errors or Missing Paths

Typical symptoms:

- Exit Code 1

- Logs show No such file or directory, config file not found, syntax error, etc.

Real case:

Once I deployed a Node.js app and the container wouldn’t start. Logs showed:

Error: ENOENT: no such file or directory, open '/app/config/prod.json'

After checking, I found I’d written the mount path in the docker run command as:

-v /home/user/config:/app/conf # Notice it's "conf"

But the app was reading from /app/config. One small difference in the path, and the app couldn’t find the config file, causing startup failure.

Troubleshooting method:

- Use docker inspect --format '{{.Mounts}}' to check mount paths

- Enter the container and ls to verify files are actually at that location

- If it’s a config file syntax error, most apps will specify which line in the logs

Solutions:

Wrong mount path:

# Wrong example
docker run -v /host/path:/wrong/path my-app

# Correct approach
docker run -v /host/path:/app/config my-app

Config file syntax error:

- For YAML files, use online tools or yamllint to check syntax

- For JSON files, use jq to validate: jq . config.json

- For MySQL config, execute mysqld --verbose --help in the container to check for syntax errors (MySQL 8.0.16 and later also provide mysqld --validate-config)
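For the mount-path case, the check can be scripted. The sketch below asks whether a container has a mount at exactly the path the app expects. has_mount is a hypothetical helper, and it assumes the Destination field of each entry in .Mounts.

```shell
# Succeeds (exit status 0) if container $1 has a mount whose destination
# inside the container is exactly $2.
has_mount() {
  docker inspect --format '{{range .Mounts}}{{println .Destination}}{{end}}' "$1" \
    | grep -qxF -- "$2"
}
```

In the Node.js case above, has_mount <container_id> /app/config would have failed while has_mount <container_id> /app/conf succeeded, pointing straight at the wrong path.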

Scenario 2: Out of Memory (OOM Killed)

Typical symptoms:

- Exit Code 137

- docker inspect --format '{{.State.OOMKilled}}' returns true

- Logs might show Cannot allocate memory, Out of memory, etc.

Real case:

I had a Java app that ran fine in local dev environment, but kept restarting when deployed to test server. Checking logs:

OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory failed; error='Cannot allocate memory' (errno=12)

Turned out Docker Desktop on the test server only had a 512MB memory limit, but this Java app needed 600MB just to start.

Troubleshooting method:

# Confirm OOM
docker inspect --format '{{.State.OOMKilled}}' <container_id>

# Check host memory
free -h

# Check container runtime memory usage
docker stats <container_id>

Solutions:

Increase container memory limit:

docker run -m 1g my-app # Limit max memory to 1GB
docker run -m 512m --memory-swap 1g my-app # 512MB RAM; memory plus swap capped at 1GB total

If using Docker Desktop, adjust in settings:

- macOS: Docker Desktop → Preferences → Resources → Memory

- Windows: Docker Desktop → Settings → Resources → Memory

Optimize the application itself:

- Java apps can limit JVM heap size: java -Xmx512m -jar app.jar

- Node.js can set: node --max-old-space-size=512 app.js

- Check code for memory leaks

Production environment recommendations:

- Set reasonable memory limits based on actual app needs

- Configure --memory-reservation for soft limits

- Monitor memory usage trends, scale up proactively
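Before raising a limit, it helps to confirm which limit the container actually got. A minimal sketch, assuming the HostConfig.Memory field (in bytes, 0 meaning no limit) from docker inspect; the function name memory_limit is made up for illustration.

```shell
# Print the memory limit of container $1 in MiB, or "unlimited" if none is set.
memory_limit() {
  limit_bytes="$(docker inspect --format '{{.HostConfig.Memory}}' "$1")" || return 1
  if [ "$limit_bytes" -eq 0 ]; then
    echo "unlimited"
  else
    echo "$(( limit_bytes / 1024 / 1024 )) MiB"
  fi
}
```

If this prints a number smaller than what the app needs at startup (as in the 512MB versus 600MB case above), the limit is the problem rather than a leak.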

Scenario 3: Port Conflicts

Typical symptoms:

- Exit Code 1

- Logs show port is already allocated, address already in use, bind: address already in use

Real case:

Monday morning at the office, I ran docker-compose up and the Nginx container wouldn’t start. Error message:

Error starting userland proxy: listen tcp4 0.0.0.0:80: bind: address already in use

Turned out I’d tested a local Nginx before leaving Friday and forgot to shut it down. Port 80 was occupied, so the new container couldn’t start.

Troubleshooting method:

Check port usage (Linux/macOS):

lsof -i :8080
netstat -tuln | grep 8080

Check port usage (Windows):

netstat -ano | findstr 8080

Check other containers’ port mappings:

docker ps --format "table {{.Names}}\t{{.Ports}}"

Solutions:

Option 1: Change mapped port

# Original command
docker run -p 8080:8080 my-app

# Change to different port
docker run -p 8081:8080 my-app

Option 2: Stop service using the port

# Find process ID
lsof -i :8080

# Kill process
kill -9 <PID>

Option 3: If another container is using it, stop that one first

docker stop <conflicting_container>

Important note: If you use --network=host mode, the container directly uses the host network, increasing port conflict probability. In this mode, container ports must not conflict with host ports.
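To find out which container holds a given host port, the port-mapping listing can be filtered. This sketch assumes tab-separated "name, ports" lines on stdin, with mappings in the usual host:port->container/proto form; containers_on_port is a hypothetical name.

```shell
# Reads "name<TAB>ports" lines and prints the names of containers that
# publish host port $1. Matching on ":PORT->" avoids false hits such as
# 18080 when looking for 8080.
containers_on_port() {
  awk -F '\t' -v needle=":$1->" 'index($2, needle) { print $1 }'
}
```

A typical call would be docker ps --format '{{.Names}}\t{{.Ports}}' | containers_on_port 8080.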

Scenario 4: Insufficient Permissions

Typical symptoms:

- Exit Code 1

- Logs show Permission denied, Operation not permitted, chown: changing ownership failed

Real case:

Deployed a MongoDB container, mounting data directory to host. Container kept failing to start:

chown: changing ownership of '/data/db': Permission denied

Turned out the host directory I mounted belonged to the root user, but the MongoDB process in the container ran as the mongodb user (UID 999), without write permission to that directory.

Troubleshooting method:

Check host directory permissions:

ls -la /host/data/path

Check user inside container:

docker run -it my-app /bin/bash
whoami
id

Check SELinux (CentOS/RHEL):

getenforce # Check SELinux status

Solutions:

Option 1: Adjust host directory permissions

# Give all users read/write (not secure, dev environment only)
chmod 777 /host/data/path

# Safer approach: change owner
chown -R 999:999 /host/data/path # 999 is container user's UID

Option 2: Use privileged mode (use with caution)

docker run --privileged=true my-app

Note: Privileged mode gives the container almost all host permissions. Security risk. Not recommended for production.

Option 3: Specify run user

docker run --user 1000:1000 my-app # Use host UID/GID

Option 4: Handle SELinux issues

# Method 1: Add Z label (relabels host content as private to this container)
docker run -v /host/path:/container/path:Z my-app

# Method 2: Add z label (relabels host content so multiple containers can share it)
docker run -v /host/path:/container/path:z my-app

# Method 3: Temporarily disable SELinux (not recommended for production)
setenforce 0

Scenario 5: Dependent Services Not Ready

Typical symptoms:

- Exit Code 1

- Logs show database connection failure, Redis connection timeout, etc.: Connection refused, ECONNREFUSED, could not connect to server

Real case:

Used docker-compose to deploy a microservice stack with an app container depending on MySQL database. Both containers started almost simultaneously, but the app container always failed:

Error: connect ECONNREFUSED 172.18.0.2:3306

The issue: although the MySQL container started, the MySQL service was still initializing and wasn’t ready to accept connections. The app container started too fast, connection failed, then exited.

Troubleshooting method:

Check if dependent services are running:

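docker ps # Check if dependency containers are running

Test network connectivity:

docker exec my-app ping database
docker exec my-app telnet database 3306
docker exec my-app nc -zv database 3306

docker exec only works while the app container is running. If it has already exited, run the same checks from a throwaway container on the same network, for example docker run --rm --network <network_name> my-app nc -zv database 3306 (this assumes the image ships nc and has no ENTRYPOINT that swallows the command).

Check Docker network config:

docker network ls
docker network inspect <network_name>

Solutions: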

Option 1: Use docker-compose health checks and depends_on

version: '3.8'
services:
  database:
    image: mysql:8.0
    healthcheck:
      test: ["CMD", "mysqladmin", "ping", "-h", "localhost"]
      interval: 10s
      timeout: 5s
      retries: 5

  app:
    image: my-app
    depends_on:
      database:
        condition: service_healthy # Wait for database health check to pass

Option 2: Add retry logic in application layer

Add connection retry logic in application code:

// Node.js example; db stands in for your app's database client
async function connectWithRetry(maxRetries = 5) {
  for (let i = 0; i < maxRetries; i++) {
    try {
      await db.connect();
      console.log('Database connected');
      return;
    } catch (err) {
      console.log(`Connection failed, retrying... (${i + 1}/${maxRetries})`);
      // Wait 5 seconds before the next attempt
      await new Promise(resolve => setTimeout(resolve, 5000));
    }
  }
  throw new Error('Failed to connect to database');
}

Option 3: Use startup wait script

Add a wait script before container startup, like wait-for-it.sh:

# In Dockerfile
COPY wait-for-it.sh /usr/local/bin/
RUN chmod +x /usr/local/bin/wait-for-it.sh

# Use when starting
CMD ["wait-for-it.sh", "database:3306", "--", "node", "app.js"]

Option 4: Configure restart policy

Let container auto-retry on failure:

docker run --restart=on-failure:3 my-app # Max 3 retries on failure

In docker-compose:

services:
  app:
    restart: on-failure

These solutions can be combined: health checks + application retry + restart policy for triple insurance.
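If pulling in wait-for-it.sh feels like too much, the same wait-and-retry idea fits in a few lines of shell, for instance in an entrypoint script. This is a sketch only: the retry function and its argument order (max attempts, delay in seconds, then the command) are invented for this example.

```shell
# Run a command until it succeeds, up to a maximum number of attempts.
# Usage: retry <max_attempts> <delay_seconds> <command> [args...]
retry() {
  max_attempts="$1"
  delay="$2"
  shift 2
  attempt=1
  while :; do
    "$@" && return 0
    if [ "$attempt" -ge "$max_attempts" ]; then
      echo "giving up after $attempt attempts: $*" >&2
      return 1
    fi
    echo "attempt $attempt failed, retrying in ${delay}s" >&2
    attempt=$(( attempt + 1 ))
    sleep "$delay"
  done
}
```

An entrypoint could then run something like retry 10 3 nc -z database 3306 before starting the app, assuming nc exists in the image. Unlike the Node.js version above, this does not sleep after the final failed attempt.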

Preventive Measures and Best Practices

Everything above is about fixing problems after they occur. But actually, if you configure certain mechanisms from the start, many problems won’t happen at all, or can auto-recover when they do.

Configure Health Checks (HEALTHCHECK)

Health checks are Docker’s container self-diagnostic mechanism. By periodically running check commands, Docker can determine if a container is actually working properly, not just that the process is alive.

Configure in Dockerfile:

FROM nginx:alpine

# Check every 30 seconds, timeout 3 seconds, mark unhealthy after 3 consecutive failures

HEALTHCHECK --interval=30s --timeout=3s --retries=3 \
  CMD curl -f http://localhost/ || exit 1

For web services, check HTTP endpoints:

HEALTHCHECK --interval=30s --timeout=5s --start-period=40s \
  CMD curl -f http://localhost:8080/health || exit 1

For databases, use dedicated commands:

# MySQL
HEALTHCHECK CMD mysqladmin ping -h localhost || exit 1

# PostgreSQL
HEALTHCHECK CMD pg_isready -U postgres || exit 1

# Redis
HEALTHCHECK CMD redis-cli ping || exit 1

Health check benefits:

- Docker Swarm replaces tasks whose containers become unhealthy. Kubernetes ignores the Dockerfile HEALTHCHECK and relies on its own liveness and readiness probes, so define those separately there

- docker-compose depends_on can wait for services to be truly healthy before starting dependent containers

- Monitoring systems can alert based on health status

View health status:

docker ps # STATUS column shows health status
docker inspect --format='{{.State.Health.Status}}' <container_id>

Set Restart Policies

Restart policies let containers auto-recover from failures without you manually restarting at 3 AM.

Docker provides four restart policies:

no (default): Don’t auto-restart

docker run --restart=no my-app

Suitable for one-time tasks, containers that finish and exit.

on-failure[:max-retries]: Only restart on abnormal exit

docker run --restart=on-failure:5 my-app # Max 5 retries

Suitable for services that might fail but you don’t want infinite retries. Note: only restarts when Exit Code is non-zero.

always: Always restart

docker run --restart=always my-app

Suitable for long-running services like web servers, API services. Even if manually stopped, it’ll auto-start when Docker Daemon restarts.

unless-stopped: Always restart unless manually stopped

docker run --restart=unless-stopped my-app

Similar to always, but if you manually docker stop, it won’t auto-start when Docker Daemon restarts. This is my most-used policy because it gives me some control.

Important notes:

- 10-second rule: Container must run at least 10 seconds after first start for restart policy to work. This prevents infinite restart loops from config errors eating system resources.

- Infinite restart trap: If container keeps restarting due to config error (like port conflict), logs will explode. Remember to use with log rotation.

For already-running containers, you can dynamically modify policy:

docker update --restart=unless-stopped <container_id>

Configure in docker-compose:

services:
  web:
    image: nginx
    restart: unless-stopped # Recommended for production

  worker:
    image: my-worker
    restart: on-failure # Might fail, but don't want infinite retries

Log Management: Prevent Disk Full

Docker by default saves all container logs in JSON files. Over time these log files can consume tens of GB of disk space. I’ve experienced production server outages because Docker logs filled up the disk.

Configure log rotation (recommended):

Create or edit /etc/docker/daemon.json:

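{
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "10m",
    "max-file": "3"
  }
}

Here max-size caps each log file at 10MB and max-file keeps at most 3 files per container. JSON does not allow comments, so keep daemon.json free of them. Restart Docker after modification:

sudo systemctl restart docker

This way each container uses max 30MB log space (10MB × 3), and old logs auto-delete. The new defaults apply only to containers created after the change; existing containers keep their old logging settings until they are recreated.

Configure for individual container:

docker run --log-opt max-size=10m --log-opt max-file=3 my-app

In docker-compose: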

services:
  app:
    image: my-app
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"

Other log driver options:

- syslog: Send to system log

- journald: Use systemd’s journal

- fluentd: Send to Fluentd for centralized management

- none: Don’t log (not recommended)

Check container log file location and size:

docker inspect --format='{{.LogPath}}' <container_id>
du -h $(docker inspect --format='{{.LogPath}}' <container_id>)

Monitoring and Alerts: Detect Problems Early

Don’t wait until containers crash to find out. Proactive monitoring prevents many production incidents.

Basic monitoring: docker stats

docker stats # Real-time display of all containers' resource usage
docker stats <container_id> # Monitor specific container

This command shows real-time data on CPU, memory, network IO, disk IO. If you see memory usage continuously growing, there might be a memory leak. Handle it proactively.

Production environment: Prometheus + Grafana

More professional approach is using Prometheus to collect metrics, Grafana to visualize:

# docker-compose.yml
services:
  prometheus:
    image: prom/prometheus
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml
    ports:
      - "9090:9090"

  grafana:
    image: grafana/grafana
    ports:
      - "3000:3000"

  cadvisor: # Collect container metrics
    image: google/cadvisor # Newer releases are published as gcr.io/cadvisor/cadvisor
    volumes:
      - /:/rootfs:ro
      - /var/run:/var/run:ro
      - /sys:/sys:ro
      - /var/lib/docker/:/var/lib/docker:ro
    ports:
      - "8080:8080"

Configure alert rules for conditions like memory usage over 80%, excessive container restarts, etc., to auto-send notifications.

Simple and crude: Scheduled script

If you think Prometheus is too complex, write a simple script:

#!/bin/bash
# check-containers.sh

# Check for any Exited status containers
EXITED=$(docker ps -a -f "status=exited" --format "{{.Names}}")

if [ -n "$EXITED" ]; then
  echo "Warning: The following containers are exited:"
  echo "$EXITED"
  # Can send email or push notification here
fi

Add to crontab to run every 5 minutes:

*/5 * * * * /path/to/check-containers.sh

Production Environment Configuration Checklist

Finally, here’s a production environment configuration checklist. Follow this and you’ll avoid most big problems:

version: '3.8'
services:
  web:
    image: my-web-app:latest

    # Restart policy
    restart: unless-stopped

    # Resource limits
    deploy:
      resources:
        limits:
          cpus: '2'
          memory: 1G
        reservations:
          memory: 512M

    # Health check
    healthcheck:
      test: ["CMD", "curl", "-f", "http://localhost/health"]
      interval: 30s
      timeout: 5s
      retries: 3
      start_period: 40s

    # Log management
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"

    # Environment variables (use secrets for sensitive info)
    environment:
      - NODE_ENV=production

    # Port mapping
    ports:
      - "8080:8080"

    # Dependencies
    depends_on:
      database:
        condition: service_healthy

  database:
    image: postgres:14
    restart: unless-stopped
    healthcheck:
      test: ["CMD-SHELL", "pg_isready -U postgres"]
      interval: 10s
      timeout: 5s
      retries: 5
    volumes:
      - db-data:/var/lib/postgresql/data
    logging:
      driver: "json-file"
      options:
        max-size: "10m"
        max-file: "3"

volumes:
  db-data:

With this config, crashed containers auto-restart, resource overages get limited, logs won’t fill disk, and health checks provide early warning. You can sleep soundly.

Conclusion

After all this, I hope you remember: Docker container startup failures aren’t scary. What’s scary is not having a systematic troubleshooting approach.

Let’s recap the core content:

Understand exit codes: See 137, think memory problem. See 1, think config or dependency problem. Exit codes are clues Docker leaves you—don’t ignore them.

4-step diagnosis:

- Check container status (docker ps -a)

- Check logs (docker logs)

- Check configuration (docker inspect)

- Interactive verification (docker run -it)

90%+ of problems are solved in step 2.

5 common scenarios: Config errors, memory shortage, port conflicts, permission issues, dependencies not ready. Remember these troubleshooting methods and you’re covered for most cases.

Prevent proactively: Configure health checks, set restart policies, manage logs well, monitor properly. These mechanisms make containers more stable and auto-recover when problems occur.

Finally, here’s a quick troubleshooting checklist you can screenshot:

Docker Container Startup Failure Troubleshooting Checklist

□ Step 1: docker ps -a check container status and exit code

□ Step 2: docker logs <container_id> check detailed logs

□ Step 3: docker inspect <container_id> check configuration

□ Step 4: docker run -it <image> interactive verification

Quick problem location for common issues:

- Exit Code 1 + "No such file" → Check mount paths and config files

- Exit Code 1 + "port already allocated" → Check port conflicts

- Exit Code 1 + "Permission denied" → Check file permissions and SELinux

- Exit Code 1 + "Connection refused" → Check if dependency services are ready

- Exit Code 137 + OOMKilled=true → Increase memory limit

- Exit Code 127 → Check CMD/ENTRYPOINT path correctness

Preventive measures:

□ Configure HEALTHCHECK

□ Set restart policy (recommend unless-stopped)

□ Configure log rotation (max-size + max-file)

□ Resource limits (-m memory limit)

□ Monitoring alerts (docker stats or Prometheus)

If this article helped you, bookmark it for next time you encounter container startup issues. If you’ve encountered any special container startup problems, feel free to share in the comments. It might help others.

May your containers always be Up and Running, and may you never receive a “container crashed” alert on Friday night!

FAQ

Why does my Docker container exit immediately after starting?

Common causes:

• Misconfigured paths

• Missing dependencies

• Port conflicts

• Memory limits (OOM)

• Wrong entrypoint

• Application errors

Check exit code and logs: `docker ps -a` and `docker logs container`.

What does Exit Code 137 mean?

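Exit code 137 is 128 + 9: the main process was killed with SIGKILL, either by the OOM Killer after hitting the memory limit or by `docker kill` / `kill -9`.

Check `docker inspect` for OOMKilled=true.

Solution: increase memory limit with `--memory` flag or fix memory leak in application.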

What does Exit Code 1 mean?

Common causes:

• Misconfigured file paths

• Missing dependencies

• Connection failures

• Permission issues

Check logs: `docker logs container` to see specific error message.

How do I troubleshoot container startup failures?

1) Check exit code: `docker ps -a`

2) View logs: `docker logs container`

3) Inspect config: `docker inspect container`

4) Test interactively: `docker run -it --entrypoint sh image`

90% of problems solved in step 2.

How do I debug a container that exits immediately?

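Start the image with a shell instead of its normal startup command: `docker run -it --entrypoint sh image`.

From inside, check if files exist, test commands manually, verify configuration.

This helps identify what's wrong with normal startup.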

What are common container startup failure scenarios?

1) Config errors (wrong paths)

2) Memory shortage (OOM)

3) Port conflicts

4) Permission issues

5) Dependencies not ready (database not started)

Each has specific troubleshooting steps.

How do I prevent containers from crashing?

1) Health checks (HEALTHCHECK)

2) Restart policy (unless-stopped)

3) Log rotation (max-size, max-file)

4) Resource limits (--memory, --cpus)

5) Monitoring alerts

These help containers auto-recover and prevent issues.

14 min read · Published on: Dec 18, 2025 · Modified on: Feb 5, 2026
