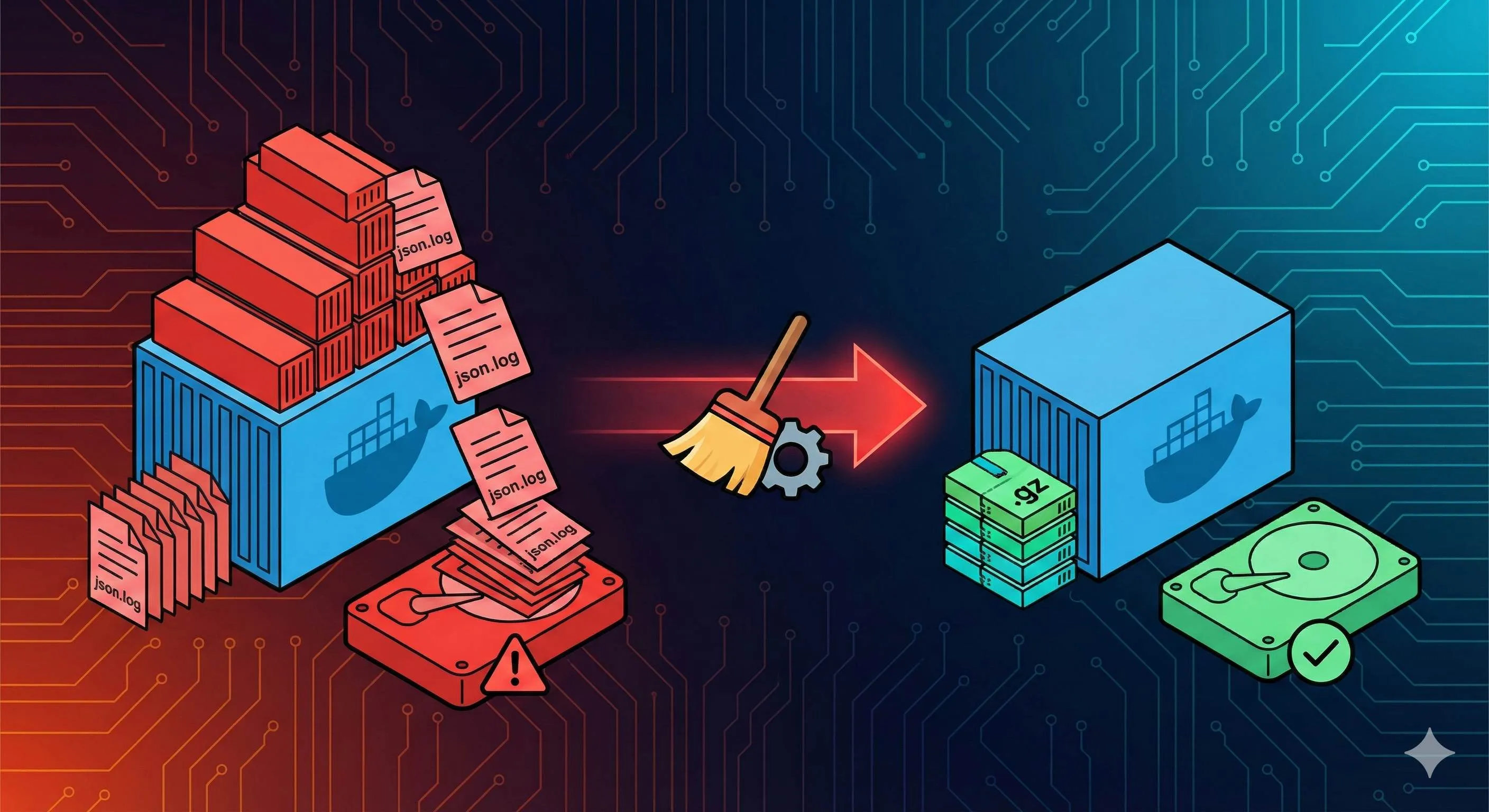

Complete Guide to Docker Log Cleanup: 5 Ways to Prevent Disk from Being Filled by json.log

3:17 AM. The phone vibration jolted me out of light sleep.

A glaring red alert on the screen: “Production server disk usage 100%, all services stopped responding.” My heart sank: this was an e-commerce platform serving hundreds of thousands of users, and every minute of downtime meant real money lost.

I immediately SSH’d into the server. df -h confirmed the root partition was indeed full. After checking everything, I found the culprit in /var/lib/docker/containers/: a container’s xxx-json.log file was an incredible 82GB!

To be honest, I was stunned. The container was running fine, so how did the logs balloon like this?

Later I learned this wasn’t an isolated case. Docker doesn’t limit log file size by default. All stdout and stderr output gets written to the json.log file, then it just keeps growing, growing, and growing until it fills up your disk.

If you’re also struggling with Docker logs, don’t worry. This article covers how to clean up large log files in an emergency, how to configure log rotation to prevent recurrence, and how to choose the right log driver. I’ve compiled all the pitfalls I’ve encountered to help you avoid them.

Why Docker Logs Fill Up Your Disk

Docker’s Log Storage Mechanism

Let’s talk about how Docker handles logs.

When your container outputs content to stdout and stderr through console.log(), System.out.println(), or any other means, Docker writes all of it to a JSON-formatted log file. This file is located at /var/lib/docker/containers/<container-id>/<container-id>-json.log.

Sounds normal, right? The problem is that Docker doesn’t do any log rotation by default.

What does that mean? This log file just keeps growing, growing, and growing, never automatically splitting or deleting old logs. Your container runs for a day: 1GB. A week: 7GB. A month: 30GB. If your application is particularly chatty (like with log level set to DEBUG), the growth rate is even more frightening.

I did the math: A medium-traffic web application outputting 100 log entries per second, averaging 100 bytes per entry, generates in a day:

    100 entries/sec × 86400 sec × 100 bytes ≈ 864 MB/day ≈ 26 GB/month

If you have 10 such containers, they write about 8.6GB a day and can fill up a 100GB disk in under two weeks. And that’s a conservative estimate.
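To rerun the arithmetic above with your own numbers, here is a minimal shell sketch. The function names bytes_per_day and days_to_fill are my own, chosen for illustration, and the estimate ignores the JSON wrapper (stream name and timestamp) that Docker adds around every line, so real files grow somewhat faster.

```shell
#!/bin/sh
# Estimate raw log volume from a steady message rate (illustrative inputs).

# bytes_per_day <entries_per_sec> <avg_bytes_per_entry>
bytes_per_day() {
    echo $(( $1 * $2 * 86400 ))
}

# days_to_fill <disk_bytes> <containers> <entries_per_sec> <avg_bytes_per_entry>
# Whole days until the given disk capacity is consumed by logs alone.
days_to_fill() {
    per_day=$(( $2 * $(bytes_per_day "$3" "$4") ))
    echo $(( $1 / per_day ))
}

bytes_per_day 100 100                  # 864000000 bytes, about 864 MB
days_to_fill 100000000000 10 100 100   # 11 whole days for a 100 GB disk
```

Swap in your own request rate and average line length to see how quickly an unrotated log could fill your disk.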

Scenarios Most Prone to This Issue

From my own and friends’ experiences, these situations are most dangerous:

1. Application Log Level Too Low

During development, for debugging convenience, you set the log level to INFO or even DEBUG, then forget to change it back when deploying to production. As a result, every HTTP request prints a bunch of debug information, and logs grow like crazy.

I’ve seen the most extreme case: a Node.js service had request body logging enabled, and every time a user uploaded an image, it would write the entire base64 string to the log. One request generated several MB of logs. In less than three days, a 200GB disk was full.

2. Program Enters Error Loop

This is even more deadly. If your application gets stuck in an infinite loop due to a bug, continuously throwing exceptions and stack traces, the log file growth rate can be terrifying.

Once, one of our microservices had a database connection pool configuration issue, retrying connections hundreds of times per second, each time printing the complete exception stack. In 3 hours, one container’s logs went from 2GB to 120GB, directly crashing the disk.

3. Long-Running Production Containers

If your containers run continuously in production for months or even years without log rotation configured, the accumulated log files can be substantial.

I once inherited a project with an Nginx container that had been running for 8 months, and the log file had reached 150GB. Every time I used docker logs to view logs, it would freeze for a while because Docker had to read this massive file.

4. High-Traffic Applications

High-traffic applications naturally generate more logs. A website with millions of daily pageviews, even if each request only prints one normal access log line, accumulates to astronomical figures.

The trouble is that many people don’t notice this issue until the disk fills up early one morning and the system crashes. Only then do they discover what a pitfall Docker logs can be.

Emergency Cleanup: Immediately Free Up Disk Space

Alright, now the disk is full, services are down, and your boss is frantically @-ing you in the group. Don’t panic; first free up the space.

Step 1: Find the Culprit

You need to know which log files are the largest. Run this command:

    find /var/lib/docker/ -name "*.log" -exec ls -sh {} \; | sort -h -r | head -20

This will list the 20 largest log files, sorted by size. You’ll see output like:

    82G /var/lib/docker/containers/a1b2c3d4.../a1b2c3d4...-json.log
    35G /var/lib/docker/containers/e5f6g7h8.../e5f6g7h8...-json.log
    12G /var/lib/docker/containers/i9j0k1l2.../i9j0k1l2...-json.log
    ...

Find the largest ones and note down the container IDs (that long string of characters).

If you want to know where a specific container’s logs are, use this command:

    docker inspect --format='{{.LogPath}}' <container_name_or_id>

For example:

    docker inspect --format='{{.LogPath}}' nginx
    # Output: /var/lib/docker/containers/abc123.../abc123...-json.log

Step 2: Safely Clear the Logs

Here’s the key: never delete log files directly with rm!

I made this mistake initially. I ran rm -f xxx-json.log, and the result was confusing: the file was gone, but the disk was just as full. Because the Docker daemon still holds an open file handle to the log, rm only removes the directory entry. Docker keeps writing to the now-invisible file, and the disk space isn’t released until that handle is closed (for example, when the container is restarted).

The correct approach is to clear the file content, not delete the file.
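If the file-handle behavior sounds abstract, it can be reproduced without Docker. The sketch below uses a throwaway file, with file descriptor 3 standing in for the handle the Docker daemon holds. It assumes the writer opened the file in append mode, as this demo does; the file names are made up for the demo.

```shell
#!/bin/sh
# Truncate vs rm on a file that another handle is still writing to.
demo_dir=$(mktemp -d)
log="$demo_dir/demo-json.log"

exec 3>>"$log"                 # open for append and keep the handle open
echo "old line 1" >&3
echo "old line 2" >&3

truncate -s 0 "$log"           # empty the file in place; fd 3 stays valid
echo "new line" >&3            # append-mode write lands at the new end of file
size_after_truncate=$(wc -c < "$log")
echo "size after truncate + write: $size_after_truncate bytes"

rm -f "$log"                   # removes the name only; the open handle keeps the data
echo "written after rm" >&3    # still succeeds, but no path points at these bytes
if [ -e "$log" ]; then path_exists=yes; else path_exists=no; fi
echo "path exists after rm: $path_exists"

exec 3>&-                      # closing the handle is what finally frees the space
rm -rf "$demo_dir"
```

After the truncate, the file holds only the new line and the writer carries on as if nothing happened. After the rm, the writes still succeed but go to a file nobody can see, which is exactly why the disk stays full.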

Method 1: Clear Using cat Command

    cat /dev/null > $(docker inspect --format='{{.LogPath}}' <container_id>)

This command writes empty content into the log file. The file remains, but its size becomes 0. The Docker daemon doesn’t notice and continues working normally. The log lives under /var/lib/docker, so run this in a root shell; with plain sudo in front of cat, the redirection itself would not run as root.

For example, clearing nginx container logs:

    cat /dev/null > $(docker inspect --format='{{.LogPath}}' nginx)

Method 2: Use truncate Command (Recommended)

    truncate -s 0 $(docker inspect --format='{{.LogPath}}' <container_id>)

truncate is more direct, specifically designed for truncating files. -s 0 means set the file size to 0 bytes.

Clearing nginx container logs:

    truncate -s 0 $(docker inspect --format='{{.LogPath}}' nginx)

Method 3: Batch Clear All Container Logs

If all your container logs are large and you want to clear them all at once, use this command:

    sudo sh -c 'truncate -s 0 /var/lib/docker/containers/*/*-json.log'

Warning: This command will clear all logs from all containers! Use with caution! Before using it, make sure you don’t need to keep any historical logs.

I generally only use this in emergency situations. Normally, I recommend clearing individually to avoid accidentally deleting important logs.
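A middle ground between clearing one container and clearing everything is to truncate only the logs above a size threshold. This is a minimal sketch; truncate_large_logs is a hypothetical helper name of mine, and it assumes GNU find and truncate, which most Linux hosts have.

```shell
#!/bin/sh
# truncate_large_logs <containers_dir> <find_size_expr>
# Empties only the *-json.log files that match the size test (e.g. +1G)
# and prints the path of each file it truncated.
truncate_large_logs() {
    find "$1" -type f -name '*-json.log' -size "$2" \
        -exec truncate -s 0 {} \; -print
}

# Usage on a Docker host (default data root, run as root):
#   truncate_large_logs /var/lib/docker/containers +1G
```

Small logs from quiet containers survive, so you keep some history for troubleshooting while still getting the space back.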

Verify the Effect

After cleaning up, run df -h to check disk space:

    df -h /var/lib/docker/

You should see the usage rate drop significantly. If it doesn’t drop, it might be because some containers are still frantically writing logs, and you need to solve that application’s problem first (like disabling DEBUG logs or fixing bugs causing error loops).

After I cleaned up that 82GB log file, disk usage dropped from 100% to 60% immediately, and services recovered right away. But I knew clearly: this was just treating the symptoms, not the root cause. To completely solve the problem, you need to configure log rotation.

Root Solution: Configure Log Rotation

Emergency cleanup can only address the immediate crisis. To completely solve the problem, you need to let Docker automatically manage log size and not let it grow indefinitely.

Global Configuration: Modify daemon.json

The simplest and most commonly used method is to set log rotation parameters in Docker’s global configuration file.

Configuration file location: /etc/docker/daemon.json

If the file doesn’t exist, create one. Then add this content:

    {
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "10m",
        "max-file": "3",
        "compress": "true"
      }
    }

Let me explain these parameters:

- max-size: Maximum size of a single log file. When it reaches this size, it will rotate (create a new file)

- max-file: Maximum number of log files to keep. Exceeding this number will delete the oldest ones

- compress: Whether to compress old log files after rotation, saving space

Under this configuration, each container will occupy at most 10MB × 3 = 30MB of log space (not counting compression).
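The same multiplication scales to a whole host. Here is a small sketch for checking the worst case before you pick values. The helper names are mine, and it assumes Docker reads the k/m/g suffixes as binary units (1m = 1024 × 1024 bytes); compression of rotated files would only lower the result.

```shell
#!/bin/sh
# size_to_bytes <value>   e.g. 10m, 100m, 1g
# Assumption: suffixes are binary units (k = 1024 bytes).
size_to_bytes() {
    num=${1%[kKmMgG]}
    case "$1" in
        *[kK]) echo $(( num * 1024 )) ;;
        *[mM]) echo $(( num * 1024 * 1024 )) ;;
        *[gG]) echo $(( num * 1024 * 1024 * 1024 )) ;;
        *)     echo "$num" ;;
    esac
}

# worst_case_bytes <max-size> <max-file> [containers]
# Upper bound on uncompressed log space for the given number of containers.
worst_case_bytes() {
    echo $(( $(size_to_bytes "$1") * $2 * ${3:-1} ))
}

worst_case_bytes 10m 3        # 31457280 bytes, 30 MiB for one container
worst_case_bytes 100m 3 20    # 6291456000 bytes, about 5.9 GiB for 20 containers
```

If the host-wide number is more than you are willing to give to logs, lower max-size or max-file before rolling the configuration out.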

Production Environment Recommended Values:

Based on my experience and industry practices, production environments can be set like this:

    {
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3",
        "compress": "true"
      }
    }

max-size: "100m" provides more log retention space, convenient for troubleshooting. max-file: "3" keeps 3 files, equivalent to the most recent 300MB of log history.

You can also adjust based on actual circumstances:

- Low-log-volume applications: max-size: "10m", max-file: "3"

- Medium-log-volume applications: max-size: "50m", max-file: "3"

- High-log-volume applications: max-size: "100m", max-file: "5"

Important Reminder: All configuration values must be enclosed in quotes because Docker requires string format. Writing "max-size": 10m (without quotes) will cause an error.

Restart Docker to Apply Configuration

After modifying daemon.json, you must restart the Docker service:

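    sudo systemctl restart docker

After restarting, Docker will load the new configuration. Keep in mind that, unless live-restore is enabled, restarting the daemon also stops running containers, so pick a suitable moment. And here is the key point: this configuration only takes effect for newly created containers; existing containers won’t be affected!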

So what to do? You need to rebuild the containers.

If using docker run to start containers:

    docker stop <container_name>
    docker rm <container_name>
    docker run [original parameters] <image>

If using docker-compose:

    docker-compose down
    docker-compose up -d

down will stop and delete containers, up -d will recreate them with the new configuration. At this point, new containers will apply the log rotation configuration.

Configure Individual Containers

Sometimes you don’t want to change the global configuration, just want to limit logs for a particularly chatty container. You can specify it when starting the container:

Using docker run:

    docker run -d \
      --name my-app \
      --log-opt max-size=10m \
      --log-opt max-file=3 \
      nginx:latest

Using docker-compose.yml:

    version: '3.8'
    services:
      web:
        image: nginx:latest
        logging:
          driver: "json-file"
          options:
            max-size: "10m"
            max-file: "3"
            compress: "true"

The advantage of this approach is flexibility: different containers can have different log policies. For example:

- Nginx container can keep more logs: max-size: "50m", max-file: "5"

- A less important scheduled task container: max-size: "5m", max-file: "2"

Verify Configuration is Effective

How to confirm the configuration actually took effect? Use docker inspect to view the container’s log configuration:

    docker inspect <container_name> | grep -A 10 LogConfig

You’ll see output like:

    "LogConfig": {
      "Type": "json-file",
      "Config": {
        "max-file": "3",
        "max-size": "10m",
        "compress": "true"
      }
    }

If Config is an empty object {}, the container was created without any log options and is still using the default configuration (unlimited growth with json-file), so you need to rebuild the container.

After I finished configuring, I specifically wrote a small script to check all containers’ log configurations, ensuring there were no stragglers. Since then, I’ve never been troubled by disk-filling log issues again.

Log Driver Selection Guide

Everything we’ve discussed so far is based on the default json-file driver. But Docker actually supports several log drivers, each with its own purpose.

You might ask: Which one should I use?

json-file (Default Driver)

This is Docker’s default choice.

Pros:

- Supports docker logs command, very convenient for troubleshooting

- Simple configuration, just add max-size and max-file

- Local storage, fast access

Cons:

- Doesn’t rotate by default, easy to fill up disk (this is also the main problem we’re discussing today)

- Logs are stored on the host machine, if container is deleted logs are gone too

Applicable Scenarios: Most scenarios. As long as log rotation is properly configured, this driver is sufficient.

Recommended Configuration:

    {
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "50m",
        "max-file": "3",
        "compress": "true"
      }
    }

local Driver (Recommended)

If you’re using Docker 18.09 or newer, I highly recommend this one.

Pros:

- Automatic rotation, limits log size by default without manual configuration

- More efficient file format, better performance than json-file

- Also supports docker logs command

Cons:

- Requires Docker 18.09+

- Log format is binary, can’t directly cat to view (but you generally don’t need to)

Applicable Scenarios: Strongly recommended for production environments. Worry-free, good performance.

Configuration Method:

    {
      "log-driver": "local",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3"
      }
    }

I now use this driver for new projects; it’s indeed more convenient than json-file.

journald Driver

If your server uses systemd (like Ubuntu 16.04+, CentOS 7+), you can consider this one.

Pros:

- Integrates with system logs, unified management

- Automatic rotation, won’t fill up disk

- Structured logs with metadata (container ID, container name, etc.)

- Supports both docker logs and journalctl commands

Cons:

- Can only be used on systemd systems

- If unfamiliar with journald, there may be a learning curve

Applicable Scenarios: If your operations system is already based on systemd and journald, using this maintains consistency.

Configuration Method:

    {
      "log-driver": "journald"
    }

View container logs using journalctl:

    journalctl -u docker.service -f CONTAINER_NAME=my-app

syslog Driver

This mainly sends logs to a syslog server.

Pros:

- Can send to remote syslog server for centralized log management

- Supports both TCP and UDP, TCP is more reliable

- If you already have syslog infrastructure, seamless integration

Cons:

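- On Docker Engine older than 20.10, doesn’t support the docker logs command, which makes troubleshooting inconvenient (20.10 and later keep a local cache, so docker logs can still read recent output)

- May have network latency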

- Requires configuring and maintaining syslog server

Applicable Scenarios: Large-scale clusters with mature centralized syslog logging systems.

Configuration Method:

    {
      "log-driver": "syslog",
      "log-opts": {
        "syslog-address": "tcp://192.168.1.100:514",
        "syslog-facility": "daemon",
        "tag": "{{.Name}}/{{.ID}}"
      }
    }

To be honest, if not absolutely necessary, I don’t really recommend using syslog. On Docker Engine older than 20.10, docker logs won’t work with it, and troubleshooting becomes really inconvenient.

My Recommendations

Choose based on your scenario:

| Scenario | Recommended Driver | Reason |
|---|---|---|
| Single machine or small cluster | local or json-file+rotation | Simple and reliable, supports docker logs |
| systemd environment | journald | Integrates with system logs, unified management |
| Large-scale cluster | fluentd/loki + local driver | Centralized logging, but keep local for emergencies |
| Existing syslog | syslog + local driver | Double insurance, both remote + local |

My practice is: use local driver for small projects, use json-file with Loki for centralized logging in large projects, keeping the most recent few hundred MB locally for emergency troubleshooting.

No matter which driver you choose, remember one principle: Always limit local log size. Don’t let it grow indefinitely, or sooner or later it will fill up your disk.

Best Practices and Operations Recommendations

Having solved the immediate problem, let’s discuss long-term operations experience.

Production Environment Configuration Checklist

If you’re responsible for production environments, I suggest going through this checklist:

1. Global Log Configuration

    {
      "log-driver": "local",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3"
      }
    }

Or use json-file:

    {
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m",
        "max-file": "3",
        "compress": "true"
      }
    }

2. Check All Container Configurations

Write a script to check if all containers have log rotation applied:

    #!/bin/bash
    # Print the log configuration of every running container
    for container in $(docker ps -q); do
      # .Name comes back with a leading slash; strip it
      name=$(docker inspect --format='{{.Name}}' "$container" | sed 's/\///')
      logconfig=$(docker inspect --format='{{json .HostConfig.LogConfig}}' "$container")
      echo "Container: $name"
      echo "Log Config: $logconfig"
      echo "---"
    done

Run it once to ensure there are no stragglers.
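With many containers, reading every line of that output gets tedious, so a variant that prints only the offenders can help. This is a rough sketch: has_rotation and audit_containers are names I made up, and the check is a plain substring match on the LogConfig JSON rather than a real JSON parse.

```shell
#!/bin/sh
# has_rotation <logconfig_json>
# Succeeds when the LogConfig JSON mentions a max-size option.
has_rotation() {
    case "$1" in
        *'"max-size"'*) return 0 ;;
        *)              return 1 ;;
    esac
}

# audit_containers: list running containers whose LogConfig has no max-size.
# Containers on the local or journald driver may be listed even though those
# drivers limit log size on their own, so read the driver type in the output.
audit_containers() {
    for id in $(docker ps -q); do
        name=$(docker inspect --format '{{.Name}}' "$id" | sed 's|^/||')
        cfg=$(docker inspect --format '{{json .HostConfig.LogConfig}}' "$id")
        has_rotation "$cfg" || echo "NO ROTATION: $name $cfg"
    done
}

# Usage on a Docker host:
#   audit_containers
```

An empty result means every running container carries an explicit size limit.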

3. Set Up Monitoring and Alerts

Don’t wait until the disk is full to find out. Set up alerts in advance:

- Disk usage > 80%: Warning

- Disk usage > 90%: Critical alert

- /var/lib/docker/containers/ directory size exceeds threshold: Warning
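On a host without a full monitoring stack, the thresholds above can be approximated with a few lines of shell run from cron. This is only a sketch: alert_level and disk_used_percent are hypothetical helper names, and what you do with the result (mail, chat webhook, and so on) is left out.

```shell
#!/bin/sh
# alert_level <used_percent>
# Map a disk usage percentage to the thresholds listed above.
alert_level() {
    if   [ "$1" -gt 90 ]; then echo critical
    elif [ "$1" -gt 80 ]; then echo warning
    else                       echo ok
    fi
}

# disk_used_percent <path>
# Usage of the filesystem that holds <path>, printed without the % sign.
disk_used_percent() {
    df -P "$1" | awk 'NR == 2 { sub(/%/, "", $5); print $5 }'
}

# Usage on a Docker host (default data root):
#   alert_level "$(disk_used_percent /var/lib/docker)"
```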

I use Prometheus + Grafana + Alertmanager, the configuration is quite simple:

    - alert: HighDiskUsage
      expr: (node_filesystem_size_bytes - node_filesystem_free_bytes) / node_filesystem_size_bytes > 0.8
      for: 5m
      annotations:
        summary: "Disk usage exceeds 80%"

4. Regular Inspections

Run log check scripts weekly or monthly to see which containers have the fastest log growth:

    # Check Docker directory total size
    du -sh /var/lib/docker/
    # Check log directory size
    du -sh /var/lib/docker/containers/
    # Find the largest log files
    find /var/lib/docker/containers/ -name "*-json.log" -exec du -h {} \; | sort -h -r | head -10

Address anomalies promptly when discovered.
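The commands above show current size, not growth. To see which logs grow fastest, one option is to save a snapshot of sizes on each inspection and compare it with the previous one. A minimal sketch follows; the function names and snapshot file locations are my own assumptions, and it expects paths without spaces, which holds for Docker’s container directories.

```shell
#!/bin/sh
# snapshot_sizes <containers_dir>
# Print "<bytes> <path>" for every *-json.log under the directory.
snapshot_sizes() {
    find "$1" -type f -name '*-json.log' -exec wc -c {} \;
}

# growth_report <old_snapshot> <new_snapshot>
# Print "<bytes_grown> <path>", fastest-growing log first.
# Logs missing from the old snapshot count as starting from 0 bytes.
growth_report() {
    awk 'FILENAME == ARGV[1] { old[$2] = $1; next }
         { printf "%.0f %s\n", $1 - old[$2], $2 }' "$1" "$2" | sort -rn
}

# Usage on a Docker host (file locations are examples):
#   snapshot_sizes /var/lib/docker/containers > /var/tmp/docker-logs.now
#   growth_report /var/tmp/docker-logs.prev /var/tmp/docker-logs.now | head -10
#   mv /var/tmp/docker-logs.now /var/tmp/docker-logs.prev
```

A log that was truncated or rotated between snapshots shows up with negative growth, which is harmless for this purpose.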

Application Layer Optimization Suggestions

Docker log management is an infrastructure matter, but the application layer also needs to cooperate.

1. Adjust Log Levels

Avoid DEBUG and INFO levels in production environments; they are too chatty. Recommendations:

- Production Environment: WARN or ERROR

- Testing Environment: INFO

- Development Environment: DEBUG

For a chatty application, this alone can remove most of the log volume.

2. Use Dedicated Logging Systems

If your application generates particularly large amounts of logs, don’t expect Docker logs to handle it. You should:

- Have applications write directly to ELK (Elasticsearch + Logstash + Kibana)

- Or use Loki + Grafana (lighter weight)

- Or use cloud vendor log services (Alibaba Cloud SLS, Tencent Cloud CLS, etc.)

Docker logs should only keep a small amount of recent logs for emergency troubleshooting.

3. Structured Logging

Output JSON-formatted structured logs for easier subsequent analysis:

    // Bad practice
    console.log("User login successful, User ID: " + userId);

    // Good practice
    console.log(JSON.stringify({
      level: "info",
      event: "user_login",
      userId: userId,
      timestamp: new Date().toISOString()
    }));

Structured logs are easier to search and analyze.

4. Log Sampling

If certain log volumes are really too large (like logging every HTTP request), consider sampling:

    // Only log 10% of requests
    if (Math.random() < 0.1) {
      console.log("HTTP request details...");
    }

Or only log detailed information when errors occur:

    if (error) {
      console.log("Detailed request info: ", requestDetails);
    }

Common Issues FAQ

Q1: Will restarting Docker after daemon.json configuration affect running containers?

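A: By default, yes. Restarting the Docker daemon stops running containers; those with a restart policy such as always or unless-stopped are started again afterwards. If containers must keep running through a daemon restart, enable "live-restore": true in daemon.json beforehand. Either way, the new log configuration only takes effect for new containers, so existing containers need to be rebuilt.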

Q2: Will clearing log files affect application operation?

A: No. The Docker daemon keeps its file handle open, so after you truncate the file it simply continues writing to it. The application is completely unaware.

Q3: If using log-driver=journald, do I still need to configure max-size?

A: No. journald manages log size itself through its own rotation settings (for example SystemMaxUse in journald.conf).

Q4: Already configured log rotation, why are logs still large?

A: Check two things:

- Have containers been rebuilt? daemon.json only affects new containers

- Is the application outputting oversized single-line logs? Log rotation is by file size, not line count

Q5: Can I directly delete old log files in /var/lib/docker/containers/ directory?

A: If they’re already rotated old files (like xxx-json.log.1.gz), you can delete them. But don’t delete the current xxx-json.log, use truncate to clear it.

Summary of Precautions

Finally, here are a few key points to help you avoid pitfalls:

- daemon.json configuration only affects new containers, existing containers need rebuilding

- Configuration parameters must have quotes, like "max-size": "10m" not "max-size": 10m

- truncate is safe, rm is dangerous: clear logs with truncate, don’t delete files

- Different log drivers support docker logs differently: json-file, local, and journald always do, while syslog only does on Docker Engine 20.10 and later

- Must restart Docker after modifying daemon.json, use systemctl restart docker

- Regularly check log configurations, so newly deployed containers don’t slip through without limits

Having said all this, the core message is just one sentence: Configure in advance, check regularly, don’t wait until the disk explodes to put out fires.

Conclusion

Back to that 3 AM alert at the beginning of the article.

At the time, I spent half an hour cleaning logs, 2 hours configuring log rotation and monitoring, then rebuilt all containers. Struggled until 6 AM, finally got it done.

But this incident also taught me a lesson: Docker log management absolutely cannot be taken lightly. It’s like a time bomb: you can’t see it normally, but once it goes off, it’s big trouble.

To summarize the core points of this article:

In Emergency: Use truncate to clear large log files, immediately free up space, let services recover.

For Prevention: Configure log rotation in daemon.json (max-size + max-file), choose the right log driver (recommend local or json-file), rebuild all containers to apply configuration.

For Long-term Operations: Set up disk monitoring alerts, regularly check log configurations, optimize application log output.

If you’re currently using Docker, whether for development or production environments, I suggest you immediately check:

- Run find /var/lib/docker/ -name "*.log" -exec ls -sh {} \; | sort -h -r | head -10 to see if there are oversized log files

- Check if /etc/docker/daemon.json has log rotation configured

- Use docker inspect to confirm all containers have log limits applied

Don’t wait until that moment when you’re woken up by alerts at dawn to realize this problem.

If this article helped you, feel free to share it with friends who might fall into the same pitfall. Let’s all hit fewer pitfalls and get more sleep.

FAQ

Why do Docker log files fill up my disk?

All stdout/stderr output goes to json.log files which grow indefinitely.

Example:

• A medium-traffic app can generate about 26GB/month per container

• Real production case: single container log reached 82GB

How do I configure Docker log rotation?

In /etc/docker/daemon.json:

• Add `"log-driver": "json-file"`

• Add `"log-opts": {"max-size": "10m", "max-file": "3"}`

Restart Docker: `systemctl restart docker`

Rebuild containers to apply. Each container then keeps at most 3 files of 10MB each.

How do I emergency clean large log files?

`truncate -s 0 /var/lib/docker/containers/*/*-json.log`

Or find large files:

`find /var/lib/docker/ -name "*.log" -size +1G`

Don't delete files - truncate is safer. Then configure rotation to prevent recurrence.

Will clearing log files affect running containers?

No. Docker continues writing to the truncated file, and the application is completely unaware.

Safe to truncate even while container is running.

Do I need to rebuild containers after configuring log rotation?

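Yes. The daemon.json settings only apply to newly created containers, so existing containers need to be rebuilt:

• With Compose: `docker-compose up -d --force-recreate`

• Otherwise: stop, remove, and re-run the container with its original parameters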

What log drivers are available?

• json-file (default, supports docker logs)

• local (better performance)

• journald (systemd integration)

• syslog (external syslog)

• none (disable logging)

For most cases, json-file or local with rotation is recommended.

How much disk space do Docker logs typically use?

Example:

• 100 logs/sec × 100 bytes × 86,400 sec = 864MB/day ≈ 26GB/month per container

• With 10 containers, can fill a 100GB disk in under two weeks

Always configure log rotation in production.

15 min read · Published on: Dec 18, 2025 · Modified on: Feb 5, 2026
