The Complete Guide to Docker Resource Limits: Stop Memory Leaks from Crashing Your Server

3 AM. The piercing sound of my phone’s alert jolted me from sleep. I groggily unlocked the screen: “Server unresponsive”, “CPU 100%”, “SSH connection timeout”. A chill ran down my spine.

Stumbling out of bed in the dark, I fired up my laptop. The VPN took three attempts to connect. I typed the ssh command into the terminal—timeout. Tried again—still timeout. My heart sank: the server was completely frozen.

No choice but to hard reboot. Five minutes later, the server finally came back online. I immediately checked the logs. What I found sent a cold sweat down my back: a container that had been running for six months had a memory leak, ballooning from an initial 500MB to 16GB, completely exhausting the server’s memory. SSH couldn’t even respond. Worse, three other perfectly healthy containers went down with it—production environment completely toast.

To be honest, this scenario is all too common in the Docker community. In 2024, Docker version 27.0.3 exposed a serious memory leak bug that caused the Linux kernel’s OOM Killer to take down 68 containers in one fell swoop. You might think: “I’m only running a few containers, surely this won’t happen to me?” Trust me, I thought the same thing.

This article isn’t about “how to prevent application memory leaks”; that’s the development team’s job. What we’re tackling is how to keep a single container from bringing down the entire server. From cgroups fundamentals, to practical usage of the --memory and --cpus parameters, to three major monitoring tools (docker stats, cAdvisor, and Prometheus), we’ll cover Docker resource limits comprehensively. After reading this, you’ll at least be able to ensure that when a memory leak appears, the container dies instead of taking the whole server down with it.

Why Do Containers Crash Servers?

Docker has a “feature”—by default, containers have absolutely no resource limits. Sounds pretty free, right? Free enough that a container can consume all the host’s memory and CPU, and then everyone goes down together.

Real case from Docker 27.4.0: users found the dockerd daemon process growing from a few hundred MB to 8GB over several days, eventually dragging the server to a crawl. That leak was in Docker itself, which container limits can’t cap, but it shows how quietly memory can run away. The bigger problem is on our side: if you don’t proactively set limits, containers are like runaway horses, consuming whatever resources they want.

This is where the Linux kernel’s “killer” comes in: the OOM Killer (Out Of Memory Killer). When the system runs out of memory, it picks the “most suitable” process and kills it to free memory. How does it choose? The kernel gives each process a score (oom_score), driven mainly by how much memory the process uses and shifted by its oom_score_adj value; the higher the score, the more likely the process is to be killed. A leaking container process that has ballooned to gigabytes usually tops the list, while the Docker daemon lowers its own OOM priority (oom_score_adj set to -500), so your containers are usually the ones that get axed.
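If you want to see how the kernel currently ranks processes, the scores live in procfs. Here is a minimal sketch, assuming a Linux host; `top_oom` is a hypothetical helper name, not a Docker command. It reads /proc/<pid>/oom_score (the kernel’s current score) and /proc/<pid>/oom_score_adj (the bias applied to it) and lists the highest-scoring processes first.

```shell
#!/bin/sh
# top_oom: hypothetical helper (not part of Docker) listing the processes the
# OOM killer would pick first. Linux only.
# Output columns: oom_score oom_score_adj pid command
top_oom() {
  local n=${1:-5} dir score adj comm
  for dir in /proc/[0-9]*; do
    # Processes can exit while we loop; skip any whose files have vanished
    score=$(cat "$dir/oom_score" 2>/dev/null) || continue
    adj=$(cat "$dir/oom_score_adj" 2>/dev/null) || continue
    comm=$(cat "$dir/comm" 2>/dev/null) || continue
    echo "$score $adj ${dir#/proc/} $comm"
  done | sort -rn | head -n "$n"
}
```

Running `top_oom 10` on a busy host should put the biggest memory consumers at the top. If your Docker daemon runs with the -500 adjustment described above, dockerd should show a negative value in the second column.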

Then you notice containers disappearing from docker ps, and docker ps -a or docker inspect shows an exit code of 137. That number means 128 + 9 (the SIGKILL signal), which in plain English translates to “forcibly killed”. Not a graceful shutdown: the process was executed on the spot by the kernel.
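The 128 + N convention can be decoded mechanically. A minimal sketch in POSIX shell; `describe_exit` is a hypothetical helper name, and it relies on the shell builtin `kill -l` to turn a signal number into its name.

```shell
#!/bin/sh
# describe_exit: hypothetical helper that explains a container's exit code.
# Codes above 128 follow the shell convention "128 + signal number".
describe_exit() {
  local code=$1 sig
  if [ "$code" -gt 128 ]; then
    sig=$((code - 128))
    # `kill -l N` prints the name of signal N without the SIG prefix (9 -> KILL)
    echo "killed by signal $sig (SIG$(kill -l "$sig"))"
  elif [ "$code" -eq 0 ]; then
    echo "exited normally"
  else
    echo "exited with error code $code"
  fi
}
```

For example, `describe_exit "$(docker inspect --format '{{.State.ExitCode}}' my_container)"` prints `killed by signal 9 (SIGKILL)` for a 137 exit. Keep in mind the code only tells you which signal arrived, not who sent it; a manual `docker kill` produces the same 137.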

Memory leak symptoms look like this:

- Container memory usage skyrockets from hundreds of MB to several GB without stopping

- Server starts heavily swapping (swap partition), disk LED flashing frantically

- Other containers respond more and more slowly, and eventually crash

- Your monitoring charts show a beautiful upward diagonal line

In 2024, there was a particularly dramatic case from the Storj community: a container’s memory climbed from a normal few hundred MB all the way to 37GB, nearly crashing an entire storage node. Had a 1GB memory limit been set in advance, the OOM Killer would have stopped the container at 1GB, and the server would have stayed rock solid.

[Image: Memory leak curve graph]


cgroups: The Foundation of Resource Limits

You may have heard that Docker uses “cgroups” to implement resource limits, but what exactly is this thing? Simply put, cgroups (Control Groups) are a Linux kernel feature specifically designed to set resource quotas for process groups—like giving each process a “resource card” with a limited balance that can’t be exceeded.

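When Docker creates a container, it automatically creates a cgroup and puts the container’s processes into it. When you run docker run -m 512m nginx, Docker (through its runtime) writes the value 536870912 (512MB in bytes) into that cgroup. On a cgroups v1 host with the cgroupfs driver, the file is memory.limit_in_bytes in the /sys/fs/cgroup/memory/docker/<container-ID>/ directory; on cgroups v2 the equivalent file is memory.max. The kernel reads this value and knows this container can use at most 512MB: once the container hits the limit and the kernel can’t reclaim enough memory, the OOM Killer kills a process inside it.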
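The 512m-to-536870912 conversion uses binary units. A minimal sketch of that arithmetic; `to_bytes` is a hypothetical helper that accepts integers only, with an optional b/k/m/g suffix.

```shell
#!/bin/sh
# to_bytes: hypothetical helper mirroring how Docker reads -m values.
# The b/k/m/g suffixes are binary units (1k = 1024 bytes).
# Integers only in this sketch.
to_bytes() {
  local value=$1 num unit mult
  num=${value%[bBkKmMgG]}     # strip a trailing unit letter, if any
  unit=${value#"$num"}        # whatever was stripped is the unit
  case $unit in
    ""|b|B) mult=1 ;;
    k|K) mult=1024 ;;
    m|M) mult=$((1024 * 1024)) ;;
    g|G) mult=$((1024 * 1024 * 1024)) ;;
    *) echo "unsupported size: $value" >&2; return 1 ;;
  esac
  echo $((num * mult))
}
```

`to_bytes 512m` prints 536870912, the same number you’ll find in memory.limit_in_bytes (or memory.max) for a `-m 512m` container.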

cgroups v1 vs v2 differences:

- v1: Splits memory, CPU, disk I/O into independent subsystems, like scattered departments managing their own affairs

- v2: Unified management, clearer hierarchy, better suited for containers that need holistic control

- Older distributions (like RHEL 7/8 and Ubuntu 20.04) still default to v1; newer ones (Ubuntu 21.10+, Debian 11+, Fedora 31+, RHEL 9+) default to v2

If you’re curious what a container’s actual cgroup configuration looks like, you can check it like this:

```shell
# Find the container's full ID
docker inspect --format='{{.Id}}' my_container

# Check memory limit (cgroups v1)
cat /sys/fs/cgroup/memory/docker/<container-ID>/memory.limit_in_bytes

# Check CPU quota (cgroups v1)
cat /sys/fs/cgroup/cpu/docker/<container-ID>/cpu.cfs_quota_us
```

The first time I saw these files, I was pretty confused: how is resource limiting implemented through the filesystem? Later I understood that this is Linux’s “everything is a file” philosophy. The kernel exposes cgroup configuration as files, Docker writes values to those files, and the kernel enforces the limits they describe. Pretty elegant design.
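The paths above only exist on cgroups v1 hosts using the cgroupfs driver. Here is a sketch that picks the right memory-limit file for the host. `cgroup_version` and `mem_limit_file` are hypothetical helpers, and the systemd-driver paths (`system.slice/docker-<id>.scope`) assume Docker’s usual default layout with no custom --cgroup-parent, so check them on your own host.

```shell
#!/bin/sh
# cgroup_version: prints 2 when /sys/fs/cgroup is the unified (v2) hierarchy, else 1.
cgroup_version() {
  if [ "$(stat -fc %T /sys/fs/cgroup 2>/dev/null)" = "cgroup2fs" ]; then
    echo 2
  else
    echo 1
  fi
}

# mem_limit_file: hypothetical helper mapping (cgroup version, container ID,
# cgroup driver) to the file holding the memory limit.
# Assumes Docker's default layouts and no custom --cgroup-parent.
mem_limit_file() {
  local version=$1 id=$2 driver=${3:-cgroupfs}
  case "$version:$driver" in
    1:systemd) echo "/sys/fs/cgroup/memory/system.slice/docker-$id.scope/memory.limit_in_bytes" ;;
    1:*)       echo "/sys/fs/cgroup/memory/docker/$id/memory.limit_in_bytes" ;;
    2:systemd) echo "/sys/fs/cgroup/system.slice/docker-$id.scope/memory.max" ;;
    *)         echo "/sys/fs/cgroup/docker/$id/memory.max" ;;
  esac
}
```

`docker info --format '{{.CgroupDriver}}'` tells you whether the daemon uses cgroupfs or systemd. On v2, memory.max holds the limit in bytes, or the word max when there is none, and the CPU quota sits next to it in cpu.max.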

[Image: cgroups hierarchy diagram]


Complete Memory Limit Parameters

Docker has a bunch of memory parameters, but only a few are commonly used. Let’s break them down one by one.

1. --memory / -m (Hard Limit, Most Critical)

This is the lifesaver parameter. When a container’s memory reaches this value, OOM Killer is triggered and the container gets killed. The minimum is 6MB (though you can’t really run much), but in production you’d want at least a few hundred MB.

```shell
# Limit container to max 512MB memory
docker run -m 512m nginx

# Can also use GB units
docker run -m 2g my-app
```

How do you set it appropriately? My experience: load test the application to find its normal memory usage, then multiply by 1.2 to 1.5. For example, if the app normally uses 300MB, a limit of 360-450MB is pretty safe. Set it too low and containers get killed frequently; set it too high and you lose the protective effect.
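The rule of thumb is simple arithmetic, which makes it easy to script when you size many services at once. A minimal sketch; `suggest_limit` is a hypothetical helper that takes the observed steady-state usage in whole MB.

```shell
#!/bin/sh
# suggest_limit: hypothetical helper applying the 1.2x to 1.5x rule of thumb.
# Input: steady-state memory usage in MB (integer) observed during a load test.
suggest_limit() {
  local usage_mb=$1
  # Integer arithmetic: multiply first, then divide, so little precision is lost
  echo "$((usage_mb * 12 / 10))m-$((usage_mb * 15 / 10))m"
}
```

`suggest_limit 300` prints `360m-450m`, a range you can feed straight into `-m`.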

2. --memory-swap (Swap Space, Easily Misunderstood)

This parameter is confusing—many people don’t understand it. It sets the total of memory + swap, not the swap size alone.

```shell
# 512MB memory + 512MB swap (total 1GB available)
docker run -m 512m --memory-swap 1g nginx

# Disable swap (memory only)
docker run -m 512m --memory-swap 512m nginx

# Allow unlimited swap (dangerous!)
docker run -m 512m --memory-swap -1 nginx
```

If you don’t set --memory-swap, the default behavior is swap = memory (provided the host has swap enabled), meaning total available memory is twice the --memory value. For example, a -m 512m container can actually use up to 1GB (512m memory + 512m swap).

Production recommendation: Either disable swap (--memory-swap equals --memory), or limit swap to no more than half of memory. Don’t set it to -1, or the container will thrash the swap and drag down the hard drive.
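Docker’s swap rules are easy to get backwards, so here they are as a tiny decision function. A minimal sketch; `swap_allowance` is hypothetical, takes values in MB, and assumes the host actually has swap configured (without it, none of these settings grant any swap).

```shell
#!/bin/sh
# swap_allowance: hypothetical helper computing how much swap a container may use
# from --memory and --memory-swap (values in MB; leave the second one empty if unset).
swap_allowance() {
  local mem=$1 memswap=$2
  if [ -z "$memswap" ]; then
    echo "$mem"              # unset: swap equal to --memory
  elif [ "$memswap" -eq -1 ]; then
    echo "unlimited"         # -1: no cap on swap
  elif [ "$memswap" -lt "$mem" ]; then
    echo "invalid: --memory-swap must be >= --memory" >&2
    return 1
  else
    echo $((memswap - mem))  # total minus RAM; equal values mean no swap
  fi
}
```

`swap_allowance 512` prints 512 (the default doubling), `swap_allowance 512 512` prints 0, and `swap_allowance 512 -1` prints unlimited, the case the recommendation above warns against.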

3. --memory-reservation (Soft Limit)

This is an “elastic quota”. When the server has enough memory, the container can exceed this value; when memory is tight, the kernel reclaims memory from the container to push it back toward this value. It must be set lower than --memory.

```shell
# Soft limit 750MB, hard limit 1GB
docker run -m 1g --memory-reservation 750m nginx
```

Use case: some applications occasionally consume lots of memory for short periods (like batch processing) but normally use little. A soft limit makes resource sharing more flexible.

4. --kernel-memory (Kernel Memory, Use with Caution)

Limits the kernel memory used by the container (such as kernel stacks, slab allocations, and network buffers); this memory can’t be swapped. The flag has been deprecated since Docker 20.10 and has no effect on cgroups v2 hosts. Honestly, unless you know exactly what you’re doing, don’t touch this parameter: set it wrong and the container won’t even start.

5. --oom-kill-disable (Dangerous Parameter, Pay Attention)

Disables OOM Killer so the container won’t be killed when memory exceeds limits. Sounds good? Dead wrong. If container memory runs wild and it won’t be killed, the result is the entire server’s memory gets exhausted.

```shell
# This will cause problems! (Container can consume unlimited memory)
docker run --oom-kill-disable nginx

# If you must use it, you MUST set memory limits
docker run -m 512m --oom-kill-disable nginx
```

When would you use this parameter? Almost never. Only if you have a special application whose processes must not be killed suddenly (like a database checkpoint process) and you can guarantee the app controls its own memory.

Real case: An AWS user crashed their EC2 instance. After investigating, they found a container without memory limits had consumed 30GB, making the instance completely unresponsive. After adding -m 2g, when the container hits the limit it just crashes and restarts—the server stays rock solid.

[Image: Memory parameter relationship diagram]


Complete CPU Limit Parameters

CPU limits are gentler than memory limits—exceeding them won’t get you killed, just throttled. But runaway CPU can still freeze up a server.

1. --cpus (Most Intuitive Method)

Specify directly how many CPUs’ worth of time the container can use; decimals are supported.

```shell
# Max 1.5 CPU cores
docker run --cpus="1.5" nginx

# Only half a core
docker run --cpus="0.5" my-app
```

Under the hood, this parameter uses the same CFS quota mechanism as --cpu-period and --cpu-quota; Docker calculates the ratio for you. Setting it to 1.5 means the container can use at most 150ms of CPU time in every 100ms scheduling period, for example one core fully plus 50% of another.
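To see the arithmetic Docker does for you, here is a minimal sketch; `cpus_to_cfs` is a hypothetical helper that assumes the default 100000-microsecond period and also prints the NanoCpus value Docker stores for --cpus.

```shell
#!/bin/sh
# cpus_to_cfs: hypothetical helper showing how a --cpus value maps onto a CFS
# quota (assuming the default 100000us period) and onto Docker's NanoCpus field.
cpus_to_cfs() {
  awk -v cpus="$1" 'BEGIN {
    period = 100000
    # %.0f instead of %d avoids 32-bit overflow in some awk builds
    printf "period=%d quota=%.0f nanocpus=%.0f\n", period, cpus * period, cpus * 1e9
  }'
}
```

`cpus_to_cfs 1.5` prints `period=100000 quota=150000 nanocpus=1500000000`. Running `docker inspect --format '{{.HostConfig.NanoCpus}}' <container-name>` on a container started with --cpus="1.5" should report that same 1500000000.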

2. --cpu-shares (Relative Weight, Not Hard Limit)

This parameter sets CPU scheduling priority, default value 1024. The key point: it only takes effect when CPU resources are tight. If the server has plenty of CPU, containers can use as much as they want.

```shell
# Container A gets 2x the CPU time of container B
docker run --cpu-shares 2048 --name app_a my-app
docker run --cpu-shares 1024 --name app_b my-app
```

For example, if a server has only 2 cores and both are running at full load, the two containers split CPU in a 2:1 ratio: container A gets about 1.33 cores, container B about 0.67 cores. But if the server’s CPU is idle, both containers can run at full speed.

When to use this? When you have multiple containers and want to prioritize certain important services during resource contention (like API services over background tasks).
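The 1.33/0.67 split comes from a simple proportion. A minimal sketch of it; `share_split` is a hypothetical helper, and it models the idealized case where every container is CPU-bound and all cores are busy, so real numbers will drift somewhat.

```shell
#!/bin/sh
# share_split: hypothetical helper estimating cores per container under full
# contention: cores * own_shares / sum_of_all_shares.
# Usage: share_split CORES SHARES_A SHARES_B ...
share_split() {
  local cores=$1
  shift
  printf '%s\n' "$*" | awk -v cores="$cores" '{
    total = 0
    for (i = 1; i <= NF; i++) total += $i
    for (i = 1; i <= NF; i++)
      printf "%.2f%s", cores * $i / total, (i < NF ? " " : "\n")
  }'
}
```

`share_split 2 2048 1024` prints `1.33 0.67`, matching the example above.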

3. --cpuset-cpus (Pin to Specific Cores)

Pins the container to certain CPU cores, not allowing it to use others.

```shell
# Only use cores 0 and 3
docker run --cpuset-cpus="0,3" nginx

# Use cores 2 through 5
docker run --cpuset-cpus="2-5" my-app
```

Use cases:

- NUMA architecture: Multi-socket CPU servers, bind containers to cores on the same CPU socket to reduce cross-socket memory access

- Avoid cache invalidation: Container processes running on fixed cores have higher CPU cache hit rates

- Isolate critical services: Bind important containers to dedicated cores to avoid interference from other containers

I’ve seen a Kubernetes environment where database containers were bound to the back 4 cores of an 8-core server, with the front 4 cores for web services—performance improvement was quite noticeable.
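A cpuset string is easy to mistype, and asking for cores the host doesn’t have will get the container rejected. This sketch expands a --cpuset-cpus value so you can compare it with `nproc`; `expand_cpuset` is a hypothetical helper that handles only comma lists and ranges.

```shell
#!/bin/sh
# expand_cpuset: hypothetical helper that expands a --cpuset-cpus value
# ("0,3", "2-5", or mixes like "0-1,4") into an explicit list of core IDs.
expand_cpuset() {
  # Split on commas, then expand each "start-end" range (a lone ID has no end)
  printf '%s\n' "$1" | tr ',' '\n' | while IFS=- read -r start end; do
    [ -n "$end" ] || end=$start
    i=$start
    while [ "$i" -le "$end" ]; do
      printf '%s\n' "$i"
      i=$((i + 1))
    done
  done | paste -sd ' ' -
}
```

`expand_cpuset 2-5` prints `2 3 4 5`; on a host where `nproc` reports 4, core 5 would not exist.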

4. --cpu-period and --cpu-quota (Fine-Grained Control)

These are low-level parameters—--cpus is just a wrapper for these two.

- --cpu-period: the CFS (Completely Fair Scheduler) scheduling period, default 100000 microseconds (100ms)
- --cpu-quota: how many microseconds of CPU time the container can use within one period

```shell
# In a 100ms period, can only use 50ms of CPU time (equivalent to 0.5 cores)
docker run --cpu-period=100000 --cpu-quota=50000 nginx

# Equivalent to
docker run --cpus="0.5" nginx
```

Most of the time --cpus is enough, unless you need very fine-grained control (like shortening the period for more frequent scheduling).
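On cgroups v2 hosts there are no separate cfs_quota/cfs_period files; both values sit in a single cpu.max file as `<quota> <period>`, or `max <period>` when there is no limit. A small sketch, assuming that format; `cores_from_cpu_max` is a hypothetical helper.

```shell
#!/bin/sh
# cores_from_cpu_max: hypothetical helper converting the contents of a cgroup v2
# cpu.max file ("<quota> <period>", or "max <period>" when unlimited) into cores.
cores_from_cpu_max() {
  printf '%s\n' "$1" | awk '{
    if ($1 == "max") print "unlimited"
    else printf "%.2f\n", $1 / $2
  }'
}
```

For a container started with --cpus="0.5" you would expect cpu.max to read `50000 100000`, which the helper turns into 0.50. The file lives in the same per-container directory as memory.max, which depends on the cgroup driver.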

Real case: a bug sent one container’s thread pool into an infinite loop, and CPU usage spiked to 800% on an 8-core server. With no limits, the entire server was dragged down and we couldn’t even SSH in. After we added --cpus="2" to every container, the same bug only slowed down the offending container, and the others stayed rock solid.

[Image: CPU limit comparison test]


Practical Monitoring Solutions

Setting resource limits is just the first step; you also need to know how many resources containers are actually using. Otherwise, by the time a memory leak triggers the OOM Killer, it’s already too late.

Tool 1: docker stats (Built-in, Zero Cost)

The simplest method, comes with Docker.

```shell
# Real-time refresh, Ctrl+C to exit
docker stats

# Output once only, suitable for script calls
docker stats --no-stream

# Only watch specific containers
docker stats nginx_container mysql_container
```

Output looks like this:

```
CONTAINER ID   NAME    CPU %   MEM USAGE / LIMIT   MEM %   NET I/O
a1b2c3d4e5f6   nginx   0.50%   45.2MiB / 512MiB    8.83%   1.2kB / 0B
```

Pros: Works out of the box, nothing to install.

Cons: Only shows current state, can’t review history, no alerts, no visualization. Good for temporary troubleshooting, not suitable for long-term monitoring.
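You can get a crude alert out of docker stats anyway by running it from cron. A minimal sketch; `over_threshold` is a hypothetical helper that reads name/percentage pairs and prints the containers above a threshold, and what you do with its output (mail, a chat webhook) is up to you.

```shell
#!/bin/sh
# over_threshold: hypothetical helper for a cron job. Reads "<name> <mem%>" lines,
# as produced by: docker stats --no-stream --format '{{.Name}} {{.MemPerc}}'
# and prints the containers whose memory percentage exceeds the threshold (default 80).
over_threshold() {
  awk -v limit="${1:-80}" '{
    pct = $2
    sub(/%$/, "", pct)          # "91.20%" -> "91.20"
    if (pct + 0 > limit + 0) print $1 " " $2
  }'
}
```

Usage: `docker stats --no-stream --format '{{.Name}} {{.MemPerc}}' | over_threshold 80`. For a container without a memory limit, docker stats computes MEM % against total host memory, so a leaking unlimited container can look deceptively low.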

Tool 2: cAdvisor (Google Product, Container Monitoring Expert)

cAdvisor (Container Advisor) automatically detects all containers on the host, collects CPU, memory, network, disk I/O metrics, and provides a web interface plus Prometheus-format metrics endpoint.

Running it is simple:

```shell
docker run -d \
  --name=cadvisor \
  --restart=always \
  -p 8080:8080 \
  -v /:/rootfs:ro \
  -v /var/run:/var/run:ro \
  -v /sys:/sys:ro \
  -v /var/lib/docker/:/var/lib/docker:ro \
  -v /dev/disk/:/dev/disk:ro \
  gcr.io/cadvisor/cadvisor:latest
```

After starting, access http://server-IP:8080 to see resource usage trend charts for each container. Access http://server-IP:8080/metrics to get Prometheus-format data.

Pros: Professional, comprehensive, supports Prometheus ecosystem.

Cons: Only retains last 2 minutes of data, need to pair with Prometheus for historical trends.
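Before wiring up Prometheus, you may want to spot-check the /metrics endpoint from a shell. A minimal sketch; `metric_for` is a hypothetical helper, and it assumes cAdvisor’s `name` label carries the Docker container name and that each sample line looks like `metric{labels} value [timestamp]`.

```shell
#!/bin/sh
# metric_for: hypothetical helper that pulls one container's value for one metric
# out of Prometheus text-format output (such as cAdvisor's /metrics).
# Assumes the "name" label holds the Docker container name.
metric_for() {
  local metric=$1 name=$2
  awk -v m="$metric" -v n="name=\"$name\"" '
    # Match lines for this metric whose label set contains name="<name>"
    index($0, m "{") == 1 && (index($0, "{" n) || index($0, "," n)) {
      match($0, /[}][^}]*$/)              # position of the last closing brace
      split(substr($0, RSTART + 1), f, " ")
      printf "%.0f\n", f[1]               # value; handles 4.7e+07-style floats
    }'
}
```

Usage: `curl -s http://server-IP:8080/metrics | metric_for container_memory_usage_bytes nginx` prints the container’s current memory usage in bytes.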

Tool 3: Prometheus + Grafana (Enterprise Solution)

This is a complete monitoring system:

- cAdvisor: Collects container metrics

- Prometheus: Scrapes and stores metric data

- Grafana: Visualization + alerting

Complete docker-compose.yml configuration:

```yaml
version: '3.8'  # optional: recent Docker Compose releases ignore this field and warn about it

services:
  cadvisor:
    image: gcr.io/cadvisor/cadvisor:latest
    container_name: cadvisor
    ports:
      - "8080:8080"
    volumes:
      - /:/rootfs:ro
      - /var/run:/var/run:ro
      - /sys:/sys:ro
      - /var/lib/docker/:/var/lib/docker:ro
      - /dev/disk/:/dev/disk:ro
    restart: always

  prometheus:
    image: prom/prometheus:latest
    container_name: prometheus
    ports:
      - "9090:9090"
    volumes:
      - ./prometheus.yml:/etc/prometheus/prometheus.yml
      - prometheus_data:/prometheus
    command:
      - '--config.file=/etc/prometheus/prometheus.yml'
      - '--storage.tsdb.path=/prometheus'
    restart: always

  grafana:
    image: grafana/grafana:latest
    container_name: grafana
    ports:
      - "3000:3000"
    volumes:
      - grafana_data:/var/lib/grafana
    environment:
      - GF_SECURITY_ADMIN_PASSWORD=admin  # change this for any real deployment
    restart: always

volumes:
  prometheus_data:
  grafana_data:
```

Accompanying prometheus.yml:

```yaml
global:
  scrape_interval: 15s

scrape_configs:
  - job_name: 'cadvisor'
    static_configs:
      - targets: ['cadvisor:8080']
```

After deployment:

- Prometheus scrapes data from cAdvisor every 15 seconds
- Access Grafana at http://server-IP:3000 (default account admin/admin)
- Add a Prometheus data source (address: http://prometheus:9090)
- Import a Grafana dashboard template (recommended Dashboard ID: 19908, specifically designed for Docker containers)

Key monitoring metrics:

- container_memory_usage_bytes: the container’s current memory usage
- container_memory_max_usage_bytes: the container’s historical peak memory usage
- container_cpu_load_average_10s: 10-second average CPU load
- container_fs_io_time_seconds_total: disk I/O time

Set up an alert rule to send email or DingTalk notifications when memory usage exceeds 80%—you can catch issues before they explode.
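One way to express that 80% rule is a Prometheus alerting rule, sketched below as a shell function that writes the rule file. Assumptions to flag: `write_memory_alert` and the file name `alert-rules.yml` are hypothetical; the rule uses cAdvisor’s `container_memory_working_set_bytes` rather than `container_memory_usage_bytes`, because the latter also counts reclaimable page cache; and it relies on cAdvisor reporting `container_spec_memory_limit_bytes` as 0 for containers with no limit.

```shell
#!/bin/sh
# write_memory_alert: hypothetical helper that writes a Prometheus alerting rule file.
# The rule fires when a container's working set stays above 80% of its memory
# limit for 5 minutes. The "> 0" filter drops containers without a limit.
write_memory_alert() {
  local out=${1:-alert-rules.yml}
  # Quoted 'EOF' stops the shell from expanding $labels inside the template
  cat > "$out" <<'EOF'
groups:
  - name: container-memory
    rules:
      - alert: ContainerMemoryNearLimit
        expr: |
          container_memory_working_set_bytes{name!=""}
            / (container_spec_memory_limit_bytes{name!=""} > 0) > 0.8
        for: 5m
        labels:
          severity: warning
        annotations:
          summary: "{{ $labels.name }} is above 80% of its memory limit"
EOF
}
```

To use it, mount the file into the prometheus container, reference it from prometheus.yml with `rule_files: ['/etc/prometheus/alert-rules.yml']`, and check it with `promtool check rules alert-rules.yml`. Prometheus only evaluates the rule; delivering email or DingTalk messages needs Alertmanager (not part of the compose file above), or you can build the equivalent alert in Grafana instead.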

Honestly, this system takes some effort to set up, but it’s a one-time investment. I now manage 20+ containers all monitored by Grafana, and I haven’t been woken up at night since.

[Image: Grafana container monitoring dashboard]


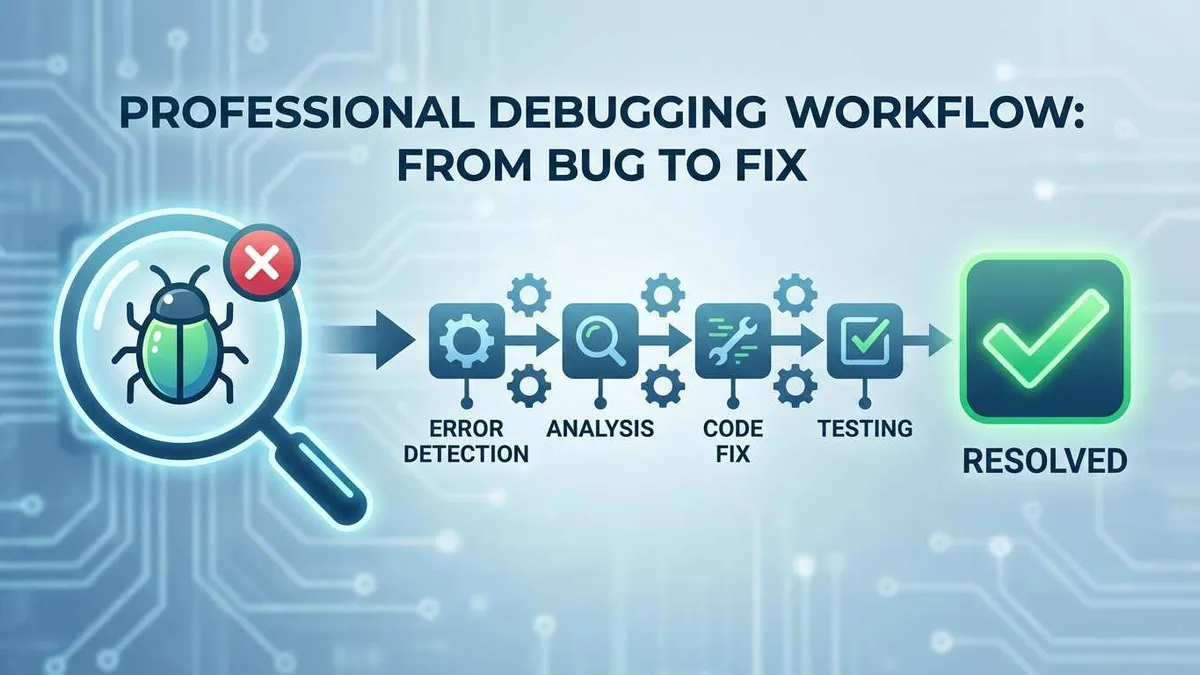

Complete Memory Leak Diagnosis Process

Monitoring alerts go off, or a container mysteriously crashes—how do you quickly pinpoint the problem? Follow this process.

Step 1: Detect Anomaly

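First use docker stats to see which container’s memory is growing wildly:

```shell
# List containers by memory percentage, highest first
docker stats --no-stream --format "{{.Name}} {{.MemPerc}}" | sort -k2 -rn
```

Found a container whose memory usage is approaching its limit? Check whether the OOM killer has hit it recently:

```shell
# Check OOM events from the last 24 hours (--until makes docker events exit instead of streaming)
docker events --filter 'event=oom' --since '24h' --until "$(date +%s)"

# Check the container's exit code and whether it was OOM-killed
docker inspect <container-name> --format='{{.State.ExitCode}} OOMKilled={{.State.OOMKilled}}'

# 137 means the process got SIGKILL; OOMKilled=true confirms the OOM killer sent it
```

Step 2: Analyze Memory Usage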

Enter the container to see which process is consuming memory:

```shell
# Enter the container (use sh instead if the image has no bash, e.g. Alpine)
docker exec -it <container-name> /bin/bash

# See process memory ranking (needs procps top/htop in the container)
top -o %MEM

# Or use ps (the procps version; BusyBox ps doesn't support --sort)
ps aux --sort=-%mem | head -n 10
```

If the container runs a Java application, you can export a heap dump for analysis:

```shell
# Inside the container: find the Java process PID
jps

# Export heap dump
jcmd <PID> GC.heap_dump /tmp/heap.hprof

# On the host: copy the file out for analysis
docker cp <container-name>:/tmp/heap.hprof ./
```

Step 3: Emergency Response

The problem is already affecting the service, so get temporary relief first:

```shell
# Restart container (in-memory state is lost; files in the container's writable layer survive)
docker restart <container-name>

# If the container is still running, adjust its memory limit on the fly
docker update --memory 1g --memory-swap 1g <container-name>

# Clean up unused resources (use carefully: removes stopped containers, unused networks,
# and all unused images; this frees disk space, not memory)
docker system prune -a
```

Step 4: Root Cause Solution

Temporary measures only stop the bleeding; a proper fix requires these:

- Fix application code: find the root cause of the memory leak (unclosed connections, unbounded cache growth, large objects never released, etc.) and modify the code
- Set resource limits: if not set yet, add the --memory parameter right away
- Deploy monitoring: set up Prometheus + Grafana so that next time you get alerts before problems explode
- Container auto-restart: add --restart=on-failure:3 to automatically restart up to 3 times after an OOM kill

Command quick reference:

```shell
# View resource limit configuration for all running containers
# (Memory is in bytes, NanoCpus in billionths of a CPU; 0 means not set)
docker ps --format "{{.Names}}" | xargs -r docker inspect \
  --format='{{.Name}}: Memory={{.HostConfig.Memory}} CPU={{.HostConfig.NanoCpus}}'

# View container's restart count
docker inspect --format='{{.RestartCount}}' <container-name>

# View container's recent logs (last 100 lines)
docker logs --tail 100 <container-name>

# View container memory usage details
docker stats --no-stream --format \
  "table {{.Name}}\t{{.MemUsage}}\t{{.MemPerc}}" <container-name>
```

Real case review: here’s how the user in that 37GB Storj memory leak case solved it:

- After discovering the memory spike, first ran docker restart to temporarily restore service
- Added a -m 1g limit to avoid dragging down the host again
- Exported container logs and a heap dump and sent them to the development team for analysis
- After a new version fixed the bug, upgraded the image and redeployed
- Deployed cAdvisor monitoring with an alert for memory usage >70%

The whole process was a hassle, but after learning the lesson, similar problems never happened again.

[Image: Memory diagnosis flowchart]


Best Practices and Pitfall Guide

After all these parameters and tools, let’s summarize how to use them correctly in production.

Production Environment Must-Do Checklist

✅ All containers must have memory limits

Don’t leave it to chance. Even an Nginx static file server should get a 512MB limit. Better to be conservative up front than to scramble after trouble hits.

✅ Dev environment simulates production limits

Don’t run containers locally without limits, then discover insufficient memory in production. Set dev environment to 80% of production limits to catch problems early.

✅ Regularly review resource usage

Check docker stats once a month. Some containers’ needs may have changed—add resources where needed, reduce where possible.

❌ Don’t disable OOM killer

Unless you’re 100% certain the app will control its own memory, don’t touch --oom-kill-disable. This is a suicide parameter.

❌ Don’t use unlimited swap

--memory-swap -1 looks tempting but actually plants a bomb in your server. A container thrashing swap will hammer the disk and drag the whole host down; it’s better to let the OOM killer handle it quickly.

❌ Don’t set memory limits too low

Limits below app’s actual needs cause frequent OOMs, actually reducing availability. Load test first, then set limits.

Correct Usage in Docker Compose

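```yaml
services:
  web:
    image: nginx:latest
    deploy:
      resources:
        limits:
          cpus: '1.5'
          memory: 512M
        reservations:
          cpus: '0.5'
          memory: 256M
    restart: on-failure:3
```

Note the deploy field (Compose file v3 syntax; the old docker-compose 1.x only applied it outside Swarm with the --compatibility flag). limits are hard limits and reservations are soft limits: here the container can use up to 512MB, and when the host runs short of memory the kernel tries to push it back toward 256MB.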

Batch Managing Multiple Containers

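If you have a group of microservice containers and want them to share one resource pool, you can place them under a common parent cgroup with --cgroup-parent:

```shell
# Both containers become children of the /my-services cgroup; each keeps its own 2g limit
docker run --cgroup-parent=/my-services -m 2g service-a
docker run --cgroup-parent=/my-services -m 2g service-b
```

The parent cgroup is created if needed, but Docker doesn’t put a limit on it, so on its own this setup still lets the pair use 4GB in total. To cap the group as a whole, configure the parent cgroup’s own limit separately. With the systemd cgroup driver, the parent must be given as a slice name (for example my-services.slice), and the slice’s limit is set through systemd (MemoryMax= on cgroups v2). Use case: several related containers form one business unit and you want to control their resources as a whole.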

Resource Limit Experience Formulas

| Application Type | Memory Limit Recommendation | CPU Limit Recommendation |
|---|---|---|
| Nginx static service | 256-512MB | 0.5-1 core |
| Node.js API | 512MB-1GB | 1-2 cores |
| Java microservice | 1-2GB | 2-4 cores |
| Database (MySQL/PostgreSQL) | 2-4GB | 2-4 cores |
| Message queue (RabbitMQ/Kafka) | 1-2GB | 1-2 cores |

These are conservative estimates—actual depends on business volume. During load testing, observe container resource usage peaks, then multiply by 1.5x as the limit value for safety.

Comparison with Kubernetes Resource Management

If you’ve used Kubernetes, you’ll find its requests and limits concepts are similar to Docker parameters:

- requests: similar to Docker’s --memory-reservation (CPU requests behave like --cpu-shares weights), and the scheduler also uses them to decide which node a Pod fits on
- limits: similar to Docker’s --memory and --cpus

The Kubernetes advantage is that resource configuration is more standardized (managed uniformly in YAML); Docker offers more flexibility (you can adjust limits at any time with docker update).

Final Advice

Resource limits aren’t “set once, valid forever”. Applications change, business volume grows, monitoring data tells you whether to add resources or optimize code. Review regularly, don’t let configuration become decoration.

[Image: Resource limit configuration comparison table]


Conclusion

Back to the opening story: woken by alerts at 3 AM, server crashed by a container. That painful lesson taught me that Docker’s default “freedom” is a trap. If you don’t proactively set limits, you’re handing over life-and-death power over your server to containers.

Looking back now, the defense system is clear:

First line of defense: Resource limits (Prevention)

Set --memory and --cpus for every container, like putting reins on wild horses. When containers go out of control they crash themselves, not dragging down the whole server. This is the most basic and critical step.

Second line of defense: Monitoring and alerts (Detection)

docker stats shows you the current state; cAdvisor + Prometheus + Grafana show you trends and history. An alert at 80% memory usage can give you hours, sometimes days, of warning before a slow leak reaches its limit.

Third line of defense: Diagnosis process (Response)

When problems do occur, follow the process: Detect anomaly → Analyze usage → Emergency response → Root cause solution. Don’t panic, the command cheat sheet is all in this article.

From cgroups fundamentals to --memory-swap parameter details, from Docker Compose configuration to Kubernetes comparison, this article has basically covered everything about Docker resource limits. What’s left is your action.

Do these three things right now:

- Check your production environment. Run this command to see which containers don’t have limits:

```shell
docker ps --format "{{.Names}}" | xargs -r docker inspect \
  --format='{{.Name}}: Memory={{.HostConfig.Memory}} CPU={{.HostConfig.NanoCpus}}'
```

Containers with Memory=0 are ticking time bombs.

- Deploy the monitoring stack: copy the docker-compose.yml from this article and run it; it takes about 30 minutes.
- Set a calendar reminder to spend 1 hour once a month checking docker stats and reviewing whether resource usage is reasonable.
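The audit command in the first step prints raw numbers: Memory in bytes and NanoCpus in billionths of a CPU. A minimal sketch that makes its output readable; `humanize_limits` is a hypothetical helper that expects exactly the `/name: Memory=… CPU=…` line format that command produces.

```shell
#!/bin/sh
# humanize_limits: hypothetical helper that rewrites the audit command's lines,
#   "/web: Memory=536870912 CPU=1500000000"
# into MB and cores, flagging 0 (not set) as UNLIMITED.
# Note: CPU=0 only means --cpus was not used; --cpu-quota limits live in HostConfig.CpuQuota.
humanize_limits() {
  awk '{
    name = $1; sub(/:$/, "", name)
    mem = $2;  sub(/^Memory=/, "", mem)
    cpu = $3;  sub(/^CPU=/, "", cpu)
    m = (mem + 0 == 0) ? "UNLIMITED" : sprintf("%dMB", mem / 1048576)
    c = (cpu + 0 == 0) ? "UNLIMITED" : sprintf("%.2f cores", cpu / 1e9)
    print name ": memory=" m " cpu=" c
  }'
}
```

Pipe the audit command into it (`... | humanize_limits`) and every line marked UNLIMITED is a container still waiting for its limits.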

Honestly, I hope you don’t have to learn this the way I did, from 3 AM alerts. The earlier you set resource limits, the more peace of mind you get. Don’t wait until trouble hits to regret it.

FAQ

Why do Docker containers crash servers?

A single container can consume all host memory and CPU, causing the Linux OOM Killer to terminate processes.

In Docker 27.0.3, a memory leak bug caused 68 containers to be killed simultaneously.

How do I set memory limits for Docker containers?

Use the `-m` (`--memory`) flag, e.g. `docker run -m 512m nginx`.

Or in docker-compose.yml: `mem_limit: 512m`

When the container exceeds the limit, the OOM Killer kills it instead of letting it crash the server.

How do I set CPU limits?

Use `--cpus`, e.g. `docker run --cpus="1.5" nginx`.

Or use `--cpu-period` and `--cpu-quota` for more precise control.

This prevents CPU exhaustion and keeps one runaway container from starving the others.

What is OOM Killer and Exit Code 137?

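The OOM Killer is the Linux kernel mechanism that kills a process to free memory when the host, or a container that has hit its memory limit, runs out of memory.

Exit Code 137 (128 + 9) means the process was forcibly killed by the SIGKILL signal rather than shut down gracefully.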

How do I monitor Docker container resource usage?

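• docker stats for quick real-time checks

• cAdvisor for a web UI and short-term trends (it keeps about 2 minutes of data by default)

• Prometheus + Grafana for production monitoring with history and alerts

Monitor memory, CPU, and network usage regularly.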

What are recommended resource limits?

• Nginx static service (256-512MB, 0.5-1 core)

• Node.js API (512MB-1GB, 1-2 cores)

• Java microservice (1-2GB, 2-4 cores)

• Database (2-4GB, 2-4 cores)

During load testing, observe peak usage and multiply by 1.5x for safety margin.

Can I update resource limits for running containers?

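Yes. Use `docker update`, e.g. `docker update --memory 1g --memory-swap 1g <container-name>`. Changes take effect immediately.

For Docker Compose, update the YAML and run `docker compose up -d`, which recreates the affected containers with the new limits.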

17 min read · Published on: Dec 18, 2025 · Modified on: Feb 5, 2026
